Cohomology Intuitively

01 Mar 2021 - Tags: cohomology-intuitively, sage, featured

So I was on mse the other day…

Anyways, somebody asked a question about finding generators in cohomology groups. I think understanding how to compute the generators is important, but it’s equally important to understand what that computation is doing. Regrettably, while there’s some very nice visual intuition[1] for homology classes and what they represent, cohomology groups tend to feel a bit more abstract.

This post is going to be an extension of the answer I gave on the linked question. Cohomology is a big subject, and there were a lot of things that I wanted to include in that answer that I didn’t have space for. A blog post is a much more reasonable setting for something a bit more rambling anyways. That said, everything contained in that answer will also be discussed here, so it’s far from prerequisite reading.

In particular, we’re going to go over Simplicial Cohomology[2], but we’ll steal language from De Rham Cohomology, and our first example will be a kind of informal cohomology just to get the idea across.

There’s a really nice example that Florian Frick gave when I took his algebraic topology class, and it was such good pedagogy I have to evangelize it. The idea is to study simplicial cohomology for graphs – it turns out to say something which is very down to earth, and we can then view cohomology of more complicated simplicial complexes as a generalization of this.

Graphs are one of very few things that we can really understand, and so using them as a case study for more complex theorems tends to be a good idea. As such, we’ll study what cohomology on graphs is all about, and there will even be some sage code at the bottom so you can check some small cases yourself!

With that out of the way, let’s get started ^_^

First things first. Let’s give a high level description of what cohomology does for us.

Say you have a geometric object, and you want to define a function on it. This is a very general framework, and “geometric” can mean a lot of different things. Maybe you want to define a continuous function on some topological space. Or perhaps you’re interested in smooth functions on a manifold. The same idea works for defining functions on schemes as well, and the rabbit hole seems to go endlessly deep!

For concreteness, let’s say we want to define a square root function on the complex plane. So our “geometric object” will be

It’s often the case that you know what you want your function to do somewhere (that’s why we want to define it at all!), and then you would like to extend that function to a function defined everywhere.

For us, then, we know we want

This is an arbitrary choice, but it certainly seems like a natural one.

We now want to extend

It is also often the case that the continuity/smoothness/etc. constraint means that there’s only one way to define your function locally. So it should be “easy” (for a certain notion of easy) to do the extension in a small neighborhood of anywhere it’s already been defined.

So we know that

Well, the real part of

So we’re forced into defining

However, sometimes the geometry of your space prevents you from gluing all of these small easy solutions together. You might have all of the pieces lying around to build your function, but the pieces don’t quite fit together right.

As before, we know

If we want to extend this continuously from

But now we can go from

And lastly, we go from

Uh oh.
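To see the failure numerically, here is a minimal sketch in plain Python (an illustration added for this walkthrough, not code from the original answer). It assumes the path is the unit circle, traversed once counterclockwise starting at 1, with starting value 1. The function name `continue_sqrt_around_circle` and the step count are made up for the illustration. At every small step there are exactly two candidate square roots, and continuity forces us to keep the one closer to the previous value.

```python
import cmath
import math

def continue_sqrt_around_circle(steps=1000):
    """Continue a square root along the unit circle, one small step at a time."""
    value = 1 + 0j  # assumed starting choice: the square root of 1 is 1
    for k in range(1, steps + 1):
        z = cmath.exp(2j * math.pi * k / steps)  # next point on the circle
        root = cmath.sqrt(z)                     # one square root; the other is -root
        # Continuity: keep whichever candidate is closer to the previous value.
        if abs(root - value) <= abs(-root - value):
            value = root
        else:
            value = -root
    return value

end_value = continue_sqrt_around_circle()
print(end_value)
```

Every individual step had only one sensible choice, yet after one full loop the value at 1 comes out (up to rounding) as -1 instead of 1.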

Obviously the above argument isn’t entirely rigorous. That said, it does a good job outlining what problem cohomology solves. We had only one choice at every step, and at every step nothing could go wrong. Yet somehow, when we got back where we started, our function was no longer well defined. We thus come to the following obvious question:

If you have a way to solve your problem locally, can we tell if those local solutions patch together to form a global solution?

It turns out the answer is yes! Our local solutions come from a global solution exactly when the “cohomology class” associated to our function vanishes[3].

There’s a bit of a zoo of cohomology theories depending on exactly what kinds of functions you’re trying to define[4]. They all work in a similar way, though: your local solutions piece together exactly when their cohomology class is $0$.

De Rham Cohomology makes this precise by looking at certain differential equations which can be solved locally. Then the cohomology theory tells us which differential equations admit a global solution. In this post, though, we’re going to spend our time thinking about Simplicial Cohomology. Simplicial Cohomology doesn’t have quite as snappy a description, but it’s more combinatorial in nature, which makes it easier to play around with.

Actually setting up cohomology requires a fair bit of machinery, so before we go through the formalities I want to take a second to detail the problem we’ll solve.

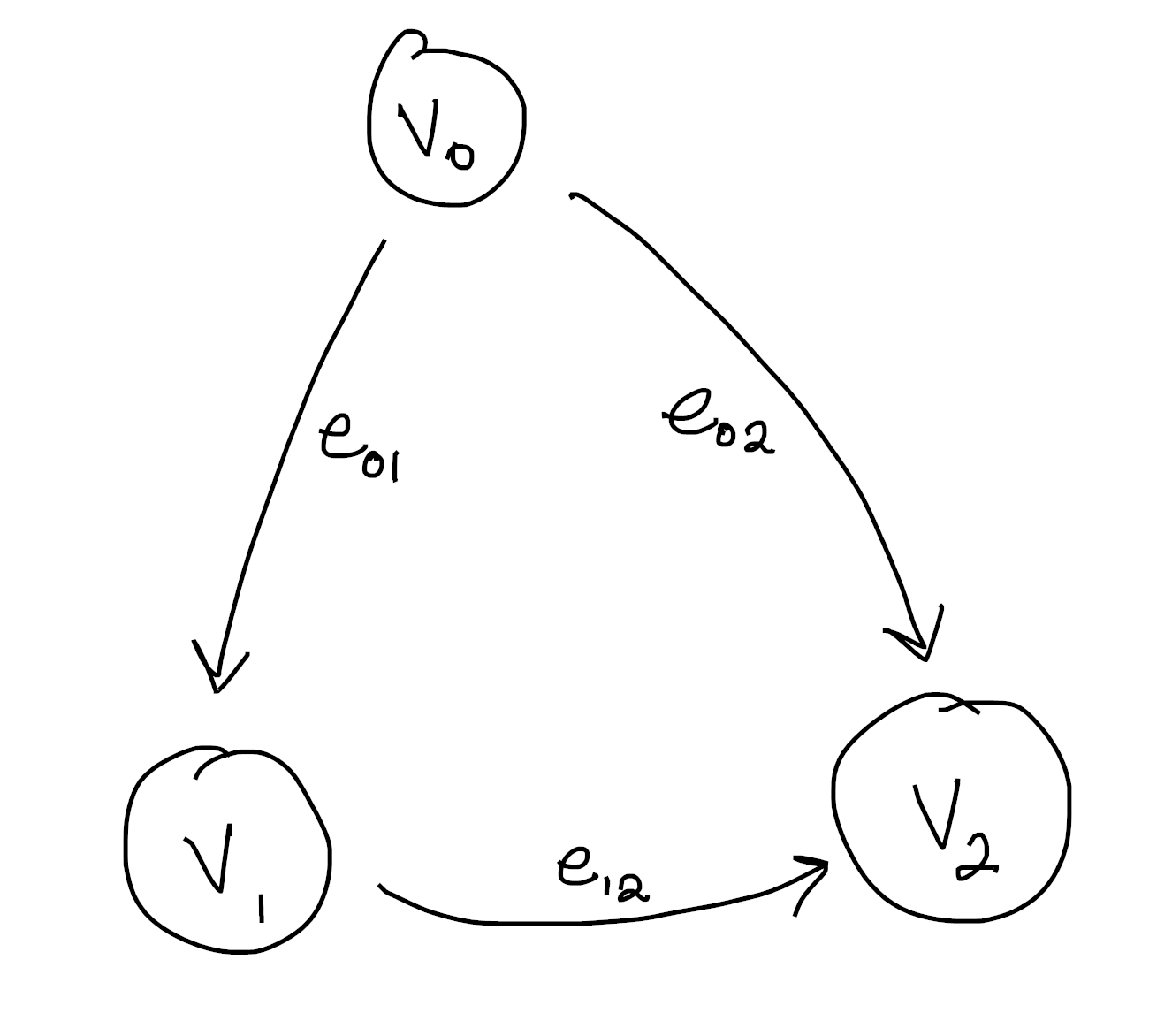

Take your favorite graph, but make sure you label the vertices. My favorite graph (at least for the purposes of explanation) is this one:

Notice the edges are always oriented from the smaller vertex to the bigger one. This is not an accident, and keeping a consistent choice of orientation is important for what follows. The simplest approach is to order your vertices, then follow the convention of always orienting each edge from its smaller vertex to its bigger one.

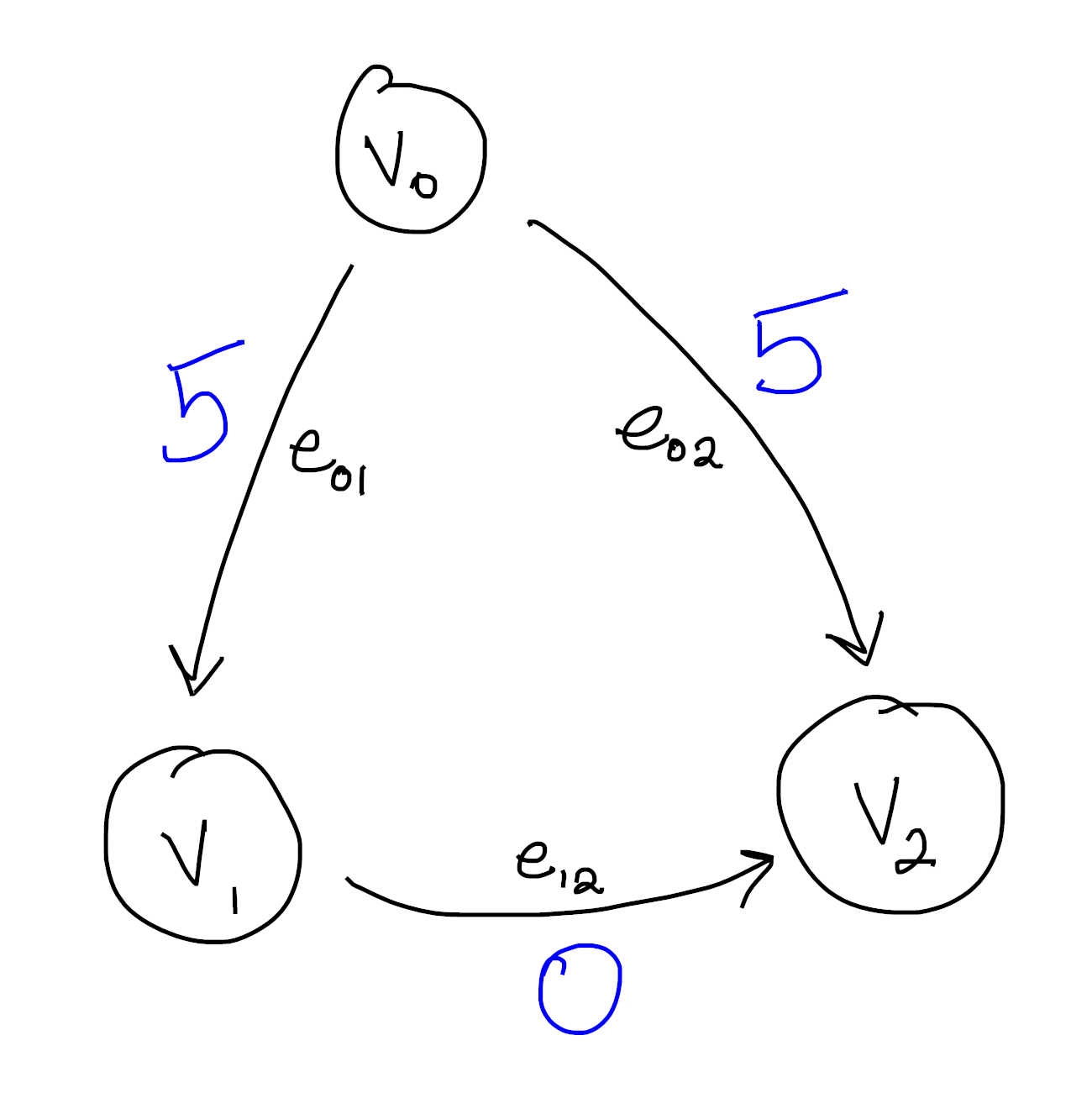

Now our problem will be to “integrate” a function defined on the edges to one defined on the vertices. What do I mean by this? Let’s see some concrete examples:

Here we see a function defined on the edges. Indeed, we could write this more formally as

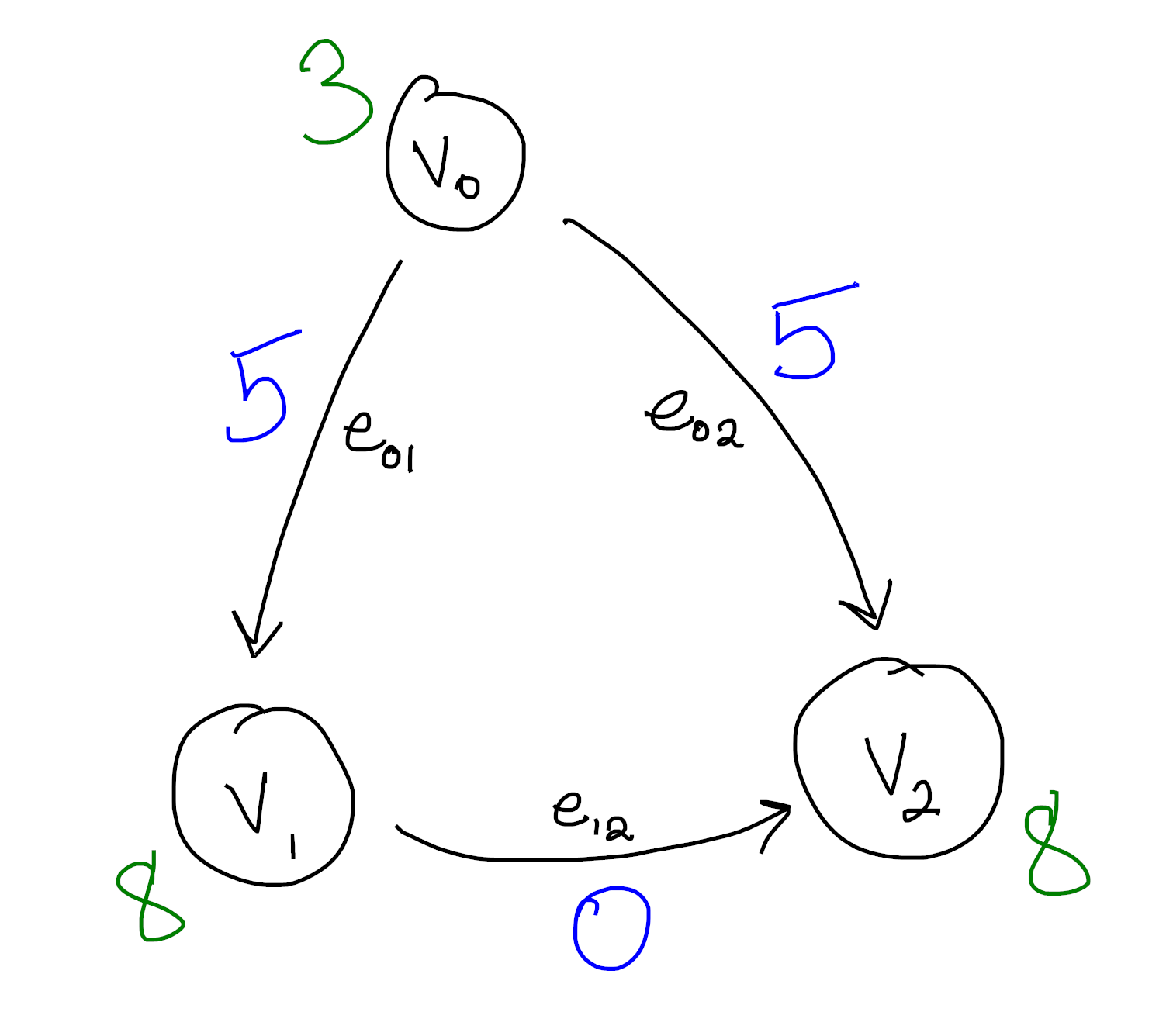

The goal now is to find a function on the vertices whose difference along each edge agrees with our function. This is what I mean when I say we’re “integrating” this edge function to the vertices. It’s not hard to see that the following works:

Again, if you like symbols, we can write this as

Then we see for each edge

As some more justification, notice this obeys a kind of “fundamental theorem of calculus”: If you want to know the total edge values along some path, you only need to subtract the vertex values at its two endpoints.
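Here is a small sketch of that telescoping in plain Python. The graph and the vertex values are hypothetical (they are not the ones pictured in this post), and the helper name `differences` is made up for the illustration. Edges are written as pairs `(u, v)`, oriented from the smaller vertex to the bigger one.

```python
# Hypothetical example graph: each edge (u, v) points from the smaller vertex to the bigger one.
edges = [(0, 1), (1, 2), (2, 3), (0, 3)]

# A made-up function on the vertices.
F = {0: 5, 1: 7, 2: 4, 3: 10}

def differences(F, edges):
    """Edge function whose value on the edge u -> v is F(v) - F(u)."""
    return {(u, v): F[v] - F[u] for (u, v) in edges}

f = differences(F, edges)

# Add up the edge values along the path 0 -> 1 -> 2 -> 3 ...
path = [(0, 1), (1, 2), (2, 3)]
total = sum(f[e] for e in path)

# ... and compare with the difference of the values at the two endpoints.
print(total, F[3] - F[0])
```

The intermediate vertex values cancel in pairs, so only the endpoints survive, just like the intermediate values of an antiderivative cancel when you integrate over adjacent intervals.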

As a (fun?) exercise, you might try to formulate and prove an analogue of the other half of the fundamental theorem of calculus. That is, can you formulate a kind of “derivative”

For (entirely imaginary) bonus points, you might try to come up with a parallel between edge functions of the form

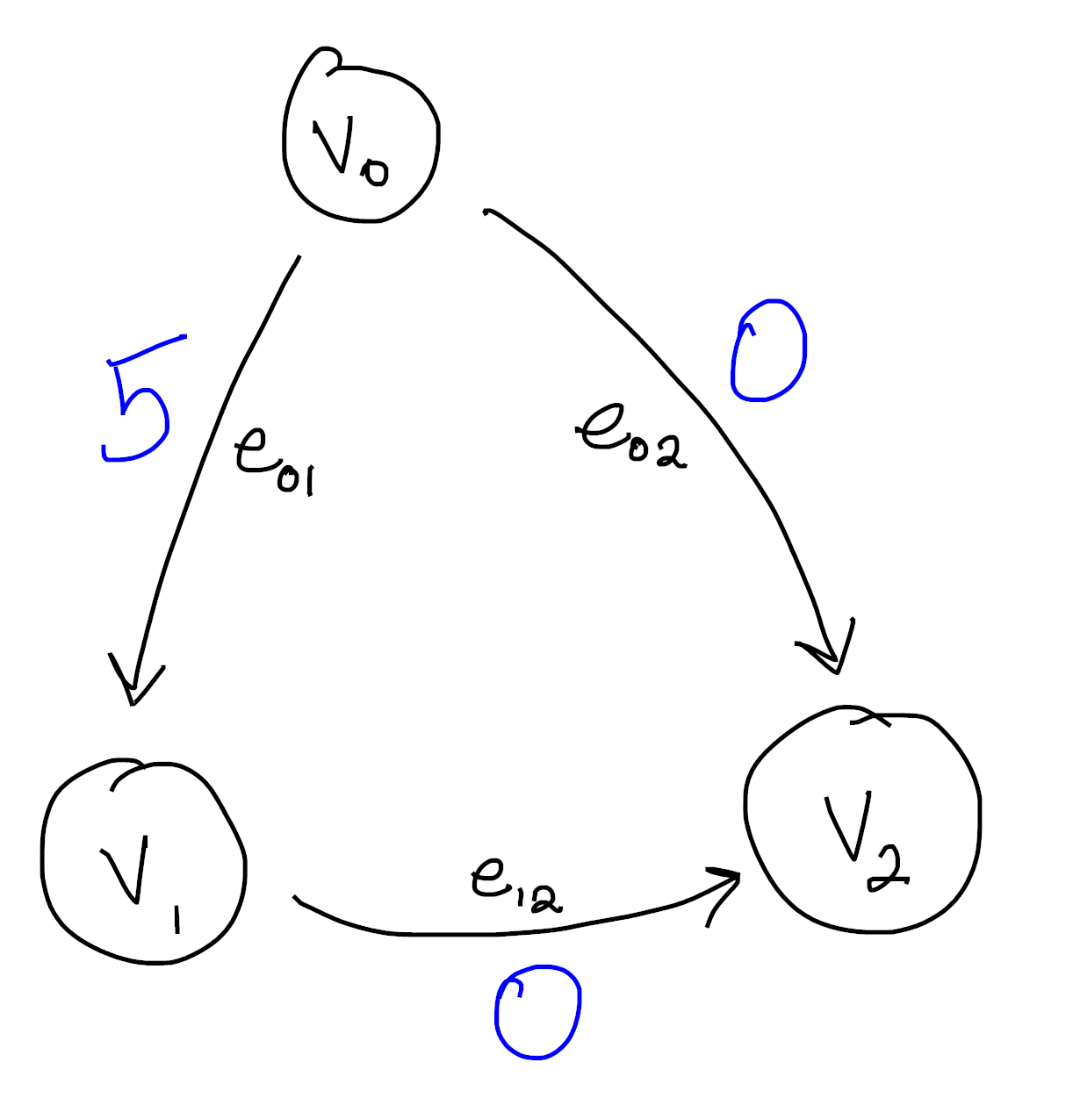

Let’s look at a different function now:

You can quickly convince yourself that no matter how hard you try, you can’t integrate this function. There is no antiderivative in the sense that no function on the vertices can possibly be compatible with our function on the edges.

After all, say we assign

This should feel at least superficially similar to the

As an aside, you can see that the problem comes from the fact that our graph has a cycle in it. Can you show that, on an acyclic graph, every edge function can be integrated to a function on the vertices?
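If you want to experiment, here is one way to sketch the “try to integrate” procedure in plain Python. This is only an illustration under assumptions: the function name `try_integrate`, the triangle graph, and the edge values are all made up, and the edge `(u, v)` is taken to be oriented from `u` to `v`. The idea is to pick a value at one vertex of each connected component, let the edge values force everything else, and report failure if two routes to the same vertex disagree.

```python
from collections import defaultdict, deque

def try_integrate(vertices, f):
    """Look for F on the vertices with F[v] - F[u] == f[(u, v)] on every edge.

    Returns such an F as a dict, or None if no such F exists.
    """
    neighbours = defaultdict(list)
    for (u, v), value in f.items():
        neighbours[u].append((v, value))    # walking u -> v adds the edge value
        neighbours[v].append((u, -value))   # walking backwards subtracts it

    F = {}
    for start in vertices:
        if start in F:
            continue
        F[start] = 0                        # free choice: one constant per component
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v, step in neighbours[u]:
                if v not in F:
                    F[v] = F[u] + step      # forced: there is only one consistent value
                    queue.append(v)
                elif F[v] != F[u] + step:
                    return None             # two routes to v disagree, so we went around a cycle
    return F

# Hypothetical triangle with two different edge functions.
triangle = ["a", "b", "c"]
good = {("a", "b"): 1, ("b", "c"): 2, ("a", "c"): 3}
bad = {("a", "b"): 1, ("b", "c"): 1, ("a", "c"): 1}

print(try_integrate(triangle, good))
print(try_integrate(triangle, bad))
```

Notice that the failure branch can only be reached when some vertex is visited along two different routes, which is another way of seeing why a cycle is needed for integration to fail.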

We will soon see that the functions which can’t be integrated are (modulo an equivalence relation) exactly the cohomology classes. So the presence of a function which can’t be integrated means there must be a cycle in our graph, and it is in this sense that cohomology “detects holes”.

This is entirely analogous to the fact that every (irrotational) vector field on a simply connected domain is conservative. It seems the presence of some “hole” is the reason some functions don’t have primitives.

Ok, so now we know what problem we’re trying to solve. When can we find an antiderivative for one of these edge functions? The machinery ends up being a bit complicated, but that’s in part because we’re working with graphs, which are one dimensional simplicial complexes. This exact same setup works for spaces of arbitrary dimension, so it makes sense that it would feel a bit overpowered for our comparatively tiny example.

First things first, we look at the free abelian groups generated by our

For the example from before, that means

which are both isomorphic to

Second things second. We want to connect these two groups together in a way that reflects the combinatorial structure. We do this with the Boundary Map

So for instance,

Now our groups assemble into a Chain Complex:

The extra groups

There’s actually a technical condition to be a chain complex that’s automatically satisfied for us because our chain only has one interesting “link”. Given an

As a quick exercise:

What is the boundary

What about

So we know that elements of

We’re now looking at all (linear) functions from

Moreover, our boundary operator

Moreover, our notation

where

Similarly

If you haven’t seen this before, you should convince yourself that it’s true. Remember that the transpose of a matrix has something to do with dualizing.
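One way to convince yourself is to check it on a small case. The sketch below, in plain Python, builds the boundary matrix of a hypothetical graph (not the one from the pictures) and compares “transpose of the boundary matrix applied to a vertex function” with “differences of that function along each edge”. The sign convention (head minus tail) and the helper names `boundary_matrix`, `transpose`, and `apply` are assumptions made for this illustration.

```python
# Hypothetical graph with vertices 0..3 and edges oriented from smaller to bigger vertex.
vertices = [0, 1, 2, 3]
edges = [(0, 1), (1, 2), (0, 2), (2, 3)]

def boundary_matrix(vertices, edges):
    """Rows are vertices, columns are edges; column j is (head of edge j) - (tail of edge j)."""
    row = {v: i for i, v in enumerate(vertices)}
    M = [[0] * len(edges) for _ in vertices]
    for j, (u, v) in enumerate(edges):
        M[row[u]][j] -= 1   # tail gets -1 (assumed sign convention)
        M[row[v]][j] += 1   # head gets +1
    return M

def transpose(M):
    return [list(column) for column in zip(*M)]

def apply(M, x):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(r, x)) for r in M]

D = boundary_matrix(vertices, edges)

# A made-up function on the vertices, stored as its vector of values.
F = [5, 7, 4, 10]

via_transpose = apply(transpose(D), F)
via_differences = [F[v] - F[u] for (u, v) in edges]
print(via_transpose, via_differences)
```

Both lists agree, entry by entry: row `j` of the transposed matrix has a `+1` at the head of edge `j` and a `-1` at its tail, so pairing it with `F` is exactly taking the difference along that edge.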

Moreover, you should check that a function

We’re in the home stretch! The second half of that box alludes to something important: A function

Since the only map

Then we define[7]

Since there are no two dimensional faces,

This says the elements of

Moreover, the basis of

If we put

What is the dimension of

We’ve spent some time now talking about what cohomology is. But again, part of its power comes from how computable it is. Without the exposition, you can see it’s really a three step process:

- Turn your combinatorial data into a chain complex by taking free abelian groups and writing down boundary matrices

- Dualize by hitting each group with

- Compute the kernels and images of

Steps

Since it’s so computable, and the best way to gain intuition for things is to work through examples, I’ve included some code to do just that!

Enter a description of a graph, and then try to figure out what you think the cohomology should be.

See if you can find geometric features of your graph which make the dimension obvious! If you want a bonus challenge, can you guess what the generators will be? Keep in mind there’s lots of generating sets, so you may get a different answer from what sage tells you even if you’re right.

You might also try to implement the algorithm we described yourself, at least for simple cases like graphs. You can then check yourself against the built in sage code below!
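If you want a starting point, here is a minimal sketch in plain Python (no sage required) that only computes the dimension of the first cohomology of a graph, working over the rationals like the sage cell does. It leans on two facts from above: there are no two dimensional faces, so every edge function counts, and the integrable edge functions are the image of the transposed boundary matrix, whose rank equals the rank of the boundary matrix itself. The function names and the head-minus-tail sign convention are assumptions made for this sketch, and it does not produce generators.

```python
from fractions import Fraction

def boundary_matrix(vertices, edges):
    """Rows are vertices, columns are edges; column j is (head of edge j) - (tail of edge j)."""
    row = {v: i for i, v in enumerate(vertices)}
    M = [[0] * len(edges) for _ in vertices]
    for j, (u, v) in enumerate(edges):
        M[row[u]][j] -= 1
        M[row[v]][j] += 1
    return M

def rank(M):
    """Rank over the rationals, by Gaussian elimination with exact fractions."""
    A = [[Fraction(x) for x in r] for r in M]
    n_cols = len(A[0]) if A else 0
    r = 0  # number of pivots found so far
    for c in range(n_cols):
        pivot = next((i for i in range(r, len(A)) if A[i][c] != 0), None)
        if pivot is None:
            continue
        A[r], A[pivot] = A[pivot], A[r]
        for i in range(r + 1, len(A)):
            factor = A[i][c] / A[r][c]
            A[i] = [a - factor * b for a, b in zip(A[i], A[r])]
        r += 1
    return r

def h1_dimension(vertices, edges):
    """(number of edge functions) - (dimension of the integrable ones)."""
    return len(edges) - rank(boundary_matrix(vertices, edges))

# The default input of the sage cell: an isolated vertex "a" and a triangle on "b", "c", "d".
vertices = ["a", "b", "c", "d"]
edges = [("b", "c"), ("c", "d"), ("b", "d")]
print(h1_dimension(vertices, edges))
```

Try it on a tree and on a graph with several independent cycles before running the sage cell, and see whether the numbers match your guess.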

```sage
# Write the edges in the box.
# You can add isolated vertices by including
# an 'edge' with only one vertex
import sys  # needed for sys.stdout.flush() below

@interact  # makes sage render the input box and call the function
def _(Simplices = input_box([["a"],["b","c"],["c","d"],["b","d"]], width=50), auto_update=False):
    show("The graph is:")
    S = SimplicialComplex(Simplices)
    show(S.graph())  # It looks like there's no builtin way to draw complexes...

    show("The chain complex is:")
    # we did it over the reals in the post,
    # but if we use the reals here, sage will
    # print 1.00000000000000 instead of 1...
    # so we're using the rationals instead
    C = S.chain_complex(base_ring=QQ)

    # mathjax uses its own font and I'm too lazy to change it
    # but it's not monospace, so the ascii_art looks silly
    # when we `show` it...
    # the solution is to print it instead, since I have
    # control over non-mathjax fonts.
    # but printing doesn't flush the output buffer, so
    # things show up in a silly order!
    # we can fix this by manually flushing the buffer ourselves.
    # this means the cell complexes are going to be left-aligned, though
    # which we'll just have to deal with.
    print(ascii_art(C))
    sys.stdout.flush()

    show("Which dualizes to:")
    Cdual = C.dual()
    print(ascii_art(Cdual))
    sys.stdout.flush()

    show("So the cohomology is:")
    # the cohomology of the original complex is
    # exactly the homology of the dual complex.
    H1 = Cdual.homology(deg=1,generators=True)
    show(QQ^(len(H1)))

    # Remember, the outputs here represent functions!
    # The entry in position i is the value that our
    # function assigns edge i
    show("With generators:")
    for g in H1:
        show(g[1].vector(1))
```

- See, for instance, this wonderful series by Jeremy Kun, and even the wikipedia page. The basic idea is that homology groups correspond to “holes” in your space. These correspond to subsurfaces that aren’t “filled in”. That is, they aren’t the boundary of another subsurface. This is where the “boundary” terminology comes from.

- I know this is a link to simplicial homology, but there’s no good overview page (at least on the first page of google) for simplicial cohomology. It’s close enough, though, especially since we’re going to be spending a lot of time talking about simplicial cohomology in this post.

- If you’ve heard of sheaves before, this is also why we care about sheaves! They are the right “data structure” for keeping track of these “locally defined functions” that we’ve been talking about.

- We can tell we’re onto something important, though, because for nice spaces, all the different definitions secretly agree! Often when you have a topic that is very robust under changes of definition, it means you’re studying something real. We see a similar robustness in, for instance, the notion of computable function. There’s at least a half dozen useful definitions of computability, and it’s often useful to switch between them fluidly to solve a given problem. Analogously, we have a bunch of definitions of cohomology theories which are known to be equivalent in many contexts. It’s similarly useful to keep multiple in your head at once and use the one best suited to a given problem.

- For

- Oftentimes you’ll see this written as

- In general, if we have a complex