Hilbert Spaces

15 Sep 2021 - Tags: analysis-qual-prep

Hilbert Spaces are banach spaces whose norms come from an inner product. This is fantastic, because an inner product is a very small amount of structure for the amount of geometry it buys us. Because of this extra geometric structure, many of the pathologies of banach spaces are absent from the theory of hilbert spaces, and the rigidity of the category of hilbert spaces (there is a complete cardinal invariant describing hilbert spaces up to isometric isomorphism) makes it extremely easy to make an abstract hilbert space concrete. Moreover, this “concretization” is exactly the genesis of the fourier transform!

With that introduction out of the way, let’s get to it!

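First, a word on conventions. I’ll be working with an inner product $\langle \cdot, \cdot \rangle$ that is conjugate linear in its first argument and linear in its second (the so-called “physicist” convention).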

Riesz Representation Theorem

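If $H$ is a hilbert space, then the map $H \to H^*$ given by $y \mapsto \langle y, \cdot \rangle$ is a conjugate linear isometry of $H$ onto $H^*$.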

In particular, every bounded linear functional on a hilbert space is of the form $x \mapsto \langle y, x \rangle$ for a unique $y \in H$.

Since we write function application on the left2, it makes sense for the associated functional to be written $\langle y, \cdot \rangle$, with $y$ on the left.

Obviously this doesn’t matter at all, but I feel the need to draw attention to it3.
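To make the theorem concrete, here is a minimal finite-dimensional sketch on $\mathbb{C}^n$. It assumes the inner product $\langle x, y \rangle = \sum_i \overline{x_i} y_i$, which is conjugate linear in the first slot. The helper names and the sample functional are hypothetical and chosen only for illustration. Since $\varphi(e_i) = \langle y, e_i \rangle = \overline{y_i}$, the representer is just $y_i = \overline{\varphi(e_i)}$.

```python
def inner(x, y):
    """Inner product on C^n: conjugate linear in x, linear in y."""
    return sum(a.conjugate() * b for a, b in zip(x, y))


def norm(x):
    return abs(inner(x, x)) ** 0.5


def riesz_representer(phi, n):
    """Return y in C^n with phi(x) == inner(y, x) for every x.

    Evaluating phi on the standard basis gives phi(e_i) = conj(y_i).
    """
    basis = [[1 if j == i else 0 for j in range(n)] for i in range(n)]
    return [complex(phi(e)).conjugate() for e in basis]


# Hypothetical coefficients defining a linear functional on C^3.
COEFFS = [2 - 1j, 0.5j, 3]


def phi(x):
    return sum(c * xi for c, xi in zip(COEFFS, x))


y = riesz_representer(phi, 3)
x = [1 + 1j, -2, 0.25j]

# phi agrees with <y, .> at a test vector.
print(abs(phi(x) - inner(y, x)) < 1e-12)
# By Cauchy-Schwarz, ||phi|| = sup |phi(v)| / ||v|| is attained at v = y,
# where it equals ||y||: this is the isometry part of the theorem.
print(abs(abs(phi(y)) / norm(y) - norm(y)) < 1e-12)
```

In infinite dimensions there is no finite basis to evaluate on, which is why the real proof goes through orthogonal complements instead.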

There are two key examples of hilbert spaces:

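- $L^2(X, \mu)$ for a measure space $(X, \mu)$, with inner product $\langle f, g \rangle = \int_X \overline{f} g \, d\mu$.

- $\ell^2(A)$ for a set $A$, with inner product $\langle a, b \rangle = \sum_{\alpha \in A} \overline{a_\alpha} b_\alpha$. This is a special case of the above, where $A$ is given the counting measure.

In fact, as we will see, every hilbert space is isometrically isomorphic to some $\ell^2(A)$!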

First, though, why should we care about inner products? How much extra structure do they really buy us? The answer is: lots!

As is so often the case in mathematics, theorems in a concrete setting become definitions in a more general setting, and once we make this move, much of the intuition from the concrete setting carries over. So let’s see what we can do with inner products.

First, it’s worth remembering that an inner product defines a norm

$$\|x\| = \sqrt{\langle x, x \rangle},$$

so every inner product preserving function also preserves the norm.

It turns out we can go the other way as well, and the polarization identity lets us write the inner product in terms of the norm4. This is fantastic, as it means any norm preserving linear map automatically preserves the inner product!
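As a quick sanity check of the polarization identity, the sketch below recovers $\langle x, y \rangle$ on $\mathbb{C}^n$ purely from norms. It assumes an inner product that is conjugate linear in the first argument. With that convention the identity reads $\langle x, y \rangle = \frac{1}{4} \sum_{k=0}^{3} i^{-k} \|x + i^k y\|^2$; with the opposite convention the powers of $i$ flip sign. The function names are illustrative.

```python
import random


def inner(x, y):
    """Inner product on C^n, conjugate linear in x and linear in y."""
    return sum(a.conjugate() * b for a, b in zip(x, y))


def norm_sq(x):
    """||x||^2, computed from the inner product."""
    return inner(x, x).real


def polarize(x, y):
    """Recover <x, y> from norms only: (1/4) * sum_k i^(-k) * ||x + i^k y||^2."""
    total = 0j
    for k in range(4):
        ik = 1j ** k
        shifted = [a + ik * b for a, b in zip(x, y)]
        total += norm_sq(shifted) / ik  # dividing by i^k multiplies by i^(-k)
    return total / 4


random.seed(0)
x = [complex(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(5)]
y = [complex(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(5)]

print(polarize(x, y))
print(inner(x, y))  # agrees with the line above up to rounding
```

Note that `polarize` only ever calls `norm_sq`, so it would work verbatim for any norm satisfying the parallelogram law.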

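We can also define the angle $\theta$ between two nonzero vectors by

$$\cos \theta = \frac{\operatorname{Re} \langle x, y \rangle}{\|x\| \|y\|},$$

and when $\langle x, y \rangle = 0$ we say that $x$ and $y$ are orthogonal, and write $x \perp y$.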

Of course, once we have orthogonality, we have a famous theorem from antiquity:

The Pythagorean Theorem

If $x \perp y$, then $\|x + y\|^2 = \|x\|^2 + \|y\|^2$.

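As a quick exercise, you should prove the Law of Cosines:

For any nonzero $x, y \in H$ with angle $\theta$ between them, $\|x - y\|^2 = \|x\|^2 + \|y\|^2 - 2 \|x\| \|y\| \cos \theta$.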
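This isn't a proof, but a numerical check can catch a sign error. The sketch below tests the Law of Cosines and the Pythagorean Theorem on $\mathbb{C}^n$. It assumes the angle is defined through $\cos \theta = \operatorname{Re}\langle x, y \rangle / (\|x\| \|y\|)$, which is one common choice in complex spaces; in a real space the $\operatorname{Re}$ does nothing.

```python
import math
import random


def inner(x, y):
    """Inner product on C^n, conjugate linear in x and linear in y."""
    return sum(a.conjugate() * b for a, b in zip(x, y))


def norm(x):
    return math.sqrt(inner(x, x).real)


def cos_angle(x, y):
    """Assumed definition of cos(theta) for nonzero x and y."""
    return inner(x, y).real / (norm(x) * norm(y))


def law_of_cosines_gap(x, y):
    """||x - y||^2 minus the right-hand side of the Law of Cosines."""
    diff = [a - b for a, b in zip(x, y)]
    rhs = norm(x) ** 2 + norm(y) ** 2 - 2 * norm(x) * norm(y) * cos_angle(x, y)
    return norm(diff) ** 2 - rhs


random.seed(1)
x = [complex(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(4)]
y = [complex(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(4)]
print(law_of_cosines_gap(x, y))  # zero up to rounding

# Pythagoras: u and v are orthogonal, since <u, v> = 1j + (-1j) = 0.
u, v = [1, 1j, 0], [1j, 1, 0]
print(norm([a + b for a, b in zip(u, v)]) ** 2, norm(u) ** 2 + norm(v) ** 2)
```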

Once we have orthogonality, we also have the notion of orthogonal complements.

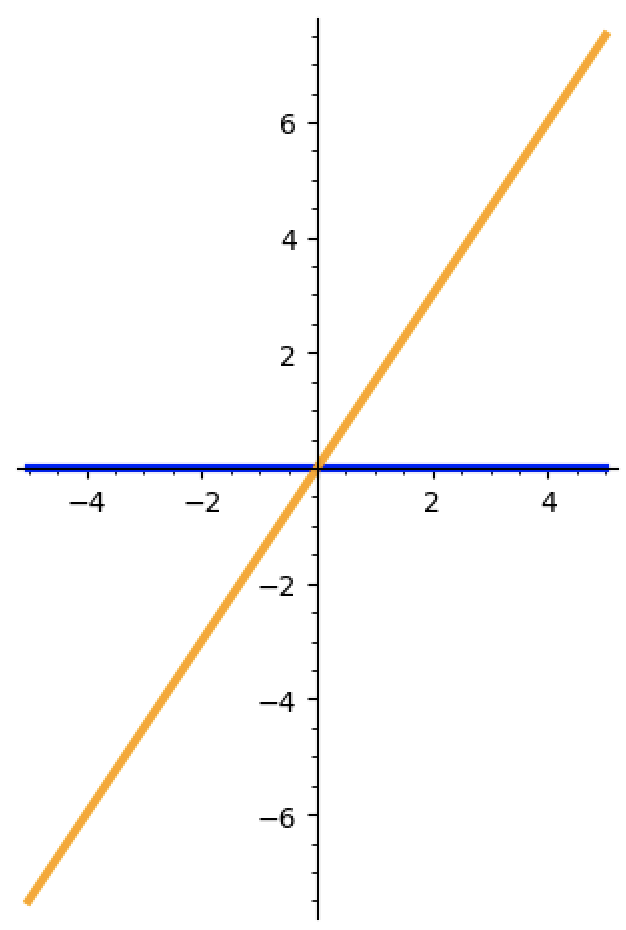

You might remember from the finite dimensional setting that there’s (a priori) no distinguished complement to a subspace. For instance, any two distinct lines through the origin in $\mathbb{R}^2$ are complements of each other.

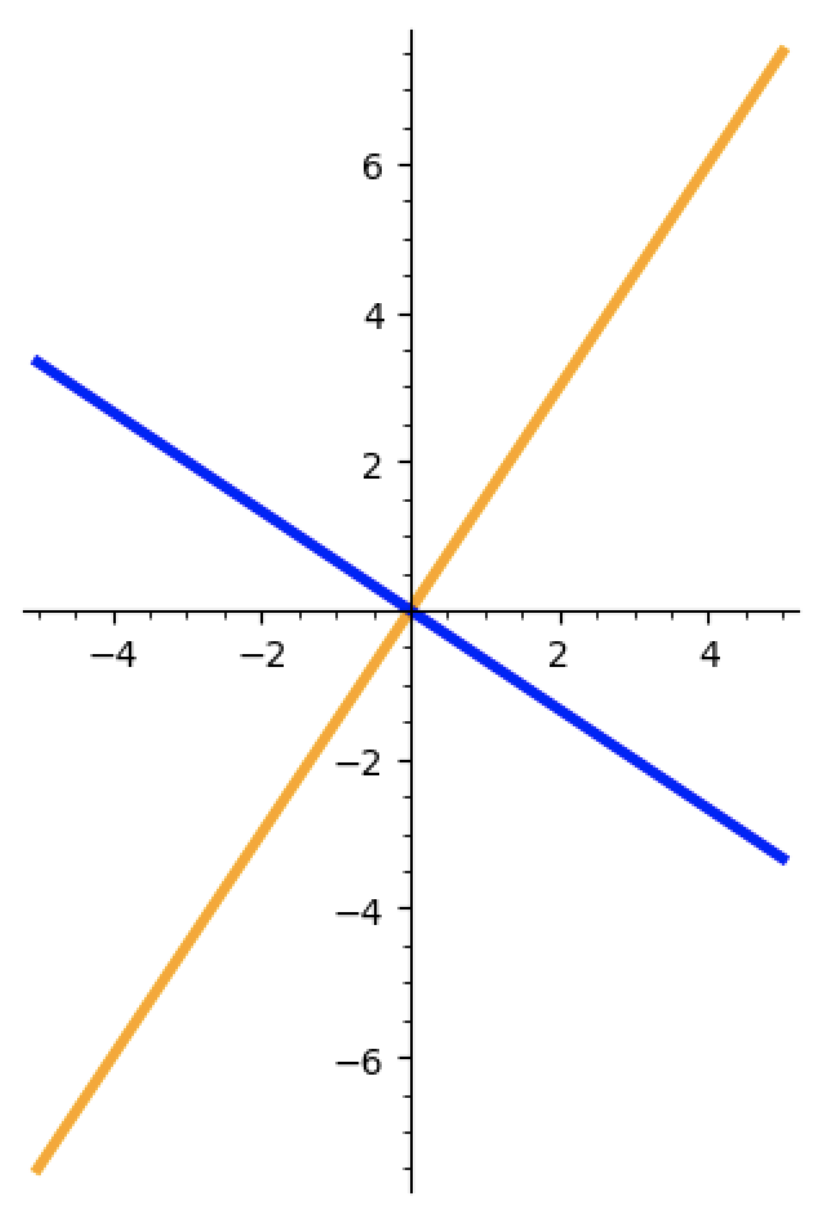

The blue subspace is one of many complements of the orange, so why should we choose it over anything else? Rather than choosing arbitrarily, we can use the inner product (in particular the notion of orthogonality) to choose a canonical complement:

$$M^\perp = \{ x \in H \mid \langle m, x \rangle = 0 \text{ for all } m \in M \}.$$

Why is this complement canonical? Because it is the unique complement so that every blue vector is orthogonal to every orange vector.

In the banach space setting (where we don’t have access to an inner product) recall there are closed subspaces with no closed complement. In the hilbert space setting this problem vanishes: the orthogonal complement of a closed subspace always exists, and is a closed complement5!

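If $M \subseteq H$ is a closed subspace, then $H = M \oplus M^\perp$, so if $x \in H$, we can write $x = m + m'$ uniquely, with $m \in M$ and $m' \in M^\perp$.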
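Here is a minimal finite-dimensional sketch of this decomposition. It assumes $M$ is spanned by a known orthonormal list; every finite-dimensional subspace is closed, so the theorem applies. The component in $M$ is $m = \sum_j \langle e_j, x \rangle e_j$, and the leftover $m' = x - m$ should be orthogonal to everything in $M$. The specific vectors are illustrative.

```python
import math


def inner(x, y):
    """Inner product on C^n, conjugate linear in x and linear in y."""
    return sum(a.conjugate() * b for a, b in zip(x, y))


def project(x, onb):
    """Orthogonal projection of x onto span(onb); onb must be orthonormal."""
    m = [0j] * len(x)
    for e in onb:
        c = inner(e, x)  # the coefficient <e, x>
        m = [mi + c * ei for mi, ei in zip(m, e)]
    return m


s = 1 / math.sqrt(2)
onb = [[1, 0, 0], [0, s, 1j * s]]  # an orthonormal basis for a plane M in C^3
x = [1 + 2j, 3, -1j]

m = project(x, onb)                     # the component in M
m_perp = [a - b for a, b in zip(x, m)]  # the component in M^perp

print(m)       # approximately [1+2j, 1, 1j]
print(m_perp)  # approximately [0, 2, -2j]
print([abs(inner(e, m_perp)) for e in onb])  # both ~0, so m_perp is in M^perp
```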

You might also remember from the finite case that we can find particularly nice bases for inner product spaces (called orthonormal bases). It’s then natural to ask if there’s an analytic extension of this concept to the hilbert space setting.

The answer, of course, is “yes”:

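If $(e_\alpha)_{\alpha \in A}$ is an orthonormal set in $H$, then the following are equivalent:

- (Completeness) If $\langle e_\alpha, x \rangle = 0$ for every $\alpha$, then $x = 0$.

- (Parseval’s Identity) $\|x\|^2 = \sum_{\alpha \in A} |\langle e_\alpha, x \rangle|^2$ for every $x \in H$.

- (Density) $x = \sum_{\alpha \in A} \langle e_\alpha, x \rangle e_\alpha$ for every $x \in H$, where the sum converges in norm.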

If any (and thus all) of the above are satisfied, we say that $(e_\alpha)_{\alpha \in A}$ is a hilbert basis for $H$.

Moreover, every hilbert space admits a hilbert basis!

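These conditions are foundational to hilbert space theory, and are worth remembering. The way that I like to remember them is as follows.

Let $(e_\alpha)_{\alpha \in A}$ be a hilbert basis for $H$.

The map $H \to \ell^2(A)$ given by $x \mapsto (\langle e_\alpha, x \rangle)_{\alpha \in A}$ is an isomorphism7.

Here Parseval’s Identity tells us that this map is isometric, and Density tells us that the obvious inverse map $(c_\alpha)_{\alpha \in A} \mapsto \sum_{\alpha \in A} c_\alpha e_\alpha$ really is an inverse.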

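In fact, one can show that the size of a hilbert basis is a complete invariant for hilbert spaces. That is, any two hilbert bases for $H$ have the same cardinality, and two hilbert spaces are isometrically isomorphic if and only if their hilbert bases have the same cardinality.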
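In finite dimensions, this correspondence can be checked numerically. The sketch below is an illustration on $\mathbb{C}^3$, and the starting vectors are arbitrary. It uses Gram-Schmidt to produce an orthonormal basis and sends $x$ to its coefficients $(\langle e_j, x \rangle)_j$. It then checks Parseval’s Identity and that $\sum_j \langle e_j, x \rangle e_j$ recovers $x$. In infinite dimensions, getting a hilbert basis takes Zorn’s lemma, or, in the separable case, Gram-Schmidt applied to a countable dense set. This is only the finite-dimensional shadow of the theorem.

```python
import math


def inner(x, y):
    """Inner product on C^n, conjugate linear in x and linear in y."""
    return sum(a.conjugate() * b for a, b in zip(x, y))


def norm(x):
    return math.sqrt(inner(x, x).real)


def gram_schmidt(vectors, tol=1e-12):
    """Orthonormalize `vectors` (modified Gram-Schmidt), skipping dependent ones."""
    onb = []
    for v in vectors:
        w = list(v)
        for e in onb:
            c = inner(e, w)  # remove the component of w along e
            w = [wi - c * ei for wi, ei in zip(w, e)]
        n = norm(w)
        if n > tol:
            onb.append([wi / n for wi in w])
    return onb


def coordinates(x, onb):
    """The map x -> (<e_j, x>)_j into l^2."""
    return [inner(e, x) for e in onb]


def reconstruct(coeffs, onb):
    """The inverse map (c_j)_j -> sum_j c_j e_j."""
    out = [0j] * len(onb[0])
    for c, e in zip(coeffs, onb):
        out = [o + c * ei for o, ei in zip(out, e)]
    return out


onb = gram_schmidt([[1, 1j, 0], [1, 0, 1], [0, 1, 1]])
x = [2 - 1j, 0.5, 3j]
c = coordinates(x, onb)

print(len(onb))                                     # 3
print(sum(abs(ci) ** 2 for ci in c), norm(x) ** 2)  # Parseval: these agree
print(reconstruct(c, onb))                          # approximately x again
```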

That’s a lot of information about hilbert spaces in the abstract. But why should we care about any of this? Let’s see how to solve some problems using this machinery!

Let’s work with $L^2(\mathbb{T})$, where $\mathbb{T} = \mathbb{R}/\mathbb{Z}$ is the circle8.

A hilbert space is separable if and only if it has a countable hilbert basis. This is nice since most hilbert spaces arising in practice (including $L^2(\mathbb{T})$) are separable.

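Historically, it was a very important problem to understand the convergence of “fourier series”. That is, if we define

$$\hat{f}(n) = \int_0^1 f(x) e^{-2 \pi i n x} \, dx$$

(which we, with the benefit of hindsight, recognize as $\langle e_n, f \rangle$, where $e_n(x) = e^{2 \pi i n x}$), when is it the case that we can recover $f$ as

$$f(x) = \sum_{n \in \mathbb{Z}} \hat{f}(n) e^{2 \pi i n x}?$$

In particular, for “nice” functions $f$, do the partial sums $S_N f(x) = \sum_{|n| \leq N} \hat{f}(n) e^{2 \pi i n x}$ converge to $f(x)$?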

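This problem was fundamental for hundreds of years, with Fourier publishing his treatise on the theory of heat in 1822, and Carleson only proving in 1966 that the fourier series of every $L^2$ function converges to it almost everywhere.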

The language of

Carleson’s theorem is famously hard to prove, but we can get a partial solution for free using the theory of hilbert spaces!

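For any $f \in L^2(\mathbb{T})$, the partial sums $S_N f = \sum_{|n| \leq N} \langle e_n, f \rangle e_n$, where $e_n(x) = e^{2 \pi i n x}$, converge to $f$ in $L^2(\mathbb{T})$. That is, $\|S_N f - f\|_2 \to 0$ as $N \to \infty$.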

This is exactly the “density” part of the equivalence above!

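With some work, one can show that the exponentials $e_n(x) = e^{2 \pi i n x}$ really do form a hilbert basis for $L^2(\mathbb{T})$, which is what makes this work.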
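To see the $L^2$ convergence in a concrete case, write $e_n(x) = e^{2 \pi i n x}$ and $\hat{f}(n) = \langle e_n, f \rangle = \int_0^1 f(x) e^{-2 \pi i n x} \, dx$. Take the sawtooth $f(x) = x$ on $[0, 1)$, extended periodically; this is a hypothetical example chosen because its coefficients are easy. Integrating by parts gives $\hat{f}(0) = \frac{1}{2}$ and $\hat{f}(n) = \frac{i}{2 \pi n}$ for $n \neq 0$. The sketch below checks this against a midpoint-rule approximation of the integral. Since $S_N f$ is the orthogonal projection of $f$ onto the span of $e_{-N}, \ldots, e_N$, the Pythagorean Theorem gives $\|f - S_N f\|_2^2 = \|f\|_2^2 - \sum_{|n| \leq N} |\hat{f}(n)|^2$, which we can watch shrink to $0$.

```python
import cmath
import math


def fourier_coefficient(f, n, samples=4096):
    """Midpoint-rule approximation of <e_n, f> = integral_0^1 f(x) e^{-2 pi i n x} dx."""
    h = 1.0 / samples
    total = 0j
    for k in range(samples):
        x = (k + 0.5) * h
        total += f(x) * cmath.exp(-2j * math.pi * n * x)
    return total * h


def sawtooth(x):
    """f(x) = x on [0, 1), thought of as a 1-periodic function."""
    return x


def exact_coefficient(n):
    """Closed form of the sawtooth coefficients, from integration by parts."""
    return 0.5 if n == 0 else 1j / (2 * math.pi * n)


def l2_error_sq(N):
    """||f - S_N f||^2 = ||f||^2 - sum_{|n| <= N} |f^(n)|^2, with ||f||^2 = 1/3."""
    energy = sum(abs(exact_coefficient(n)) ** 2 for n in range(-N, N + 1))
    return 1 / 3 - energy


for n in range(-3, 4):
    print(n, fourier_coefficient(sawtooth, n), exact_coefficient(n))

for N in (1, 10, 100, 1000):
    print(N, l2_error_sq(N))  # decreases towards 0, roughly like 1 / (2 pi^2 N)
```

This $f$ has a jump at the integers, so its fourier series cannot converge uniformly, yet the $L^2$ error still goes to zero.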

Hilbert spaces are also useful in the world of ergodic theory.

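Say we have a probability space $(X, \mu)$, a measure preserving map $T : X \to X$, and a function $f \in L^2(X, \mu)$. It’s natural to guess that if we average $f$ along the orbits of $T$, the averages $\frac{1}{N} \sum_{n=0}^{N-1} f \circ T^n$ should converge to a $T$-invariant function10.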

A result in hilbert space theory tells us we aren’t off base!

Von Neumann Ergodic Theorem

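If $U$ is an isometry on a hilbert space $H$ (for instance, a unitary operator), then for every $x \in H$,

$$\frac{1}{N} \sum_{n=0}^{N-1} U^n x \to P x \quad \text{in norm},$$

where $P$ is the orthogonal projection onto the closed subspace $\{ x \in H \mid U x = x \}$ of $U$-invariant vectors.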

This gives us the Mean Ergodic Theorem as a corollary!

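If $T : X \to X$ is a measure preserving map and $f \in L^2(X, \mu)$, then

$$\frac{1}{N} \sum_{n=0}^{N-1} f \circ T^n \to P f \quad \text{in } L^2(X, \mu),$$

where $P$ is the orthogonal projection onto the $T$-invariant functions (that is, the $g$ with $g \circ T = g$). Indeed, $f \mapsto f \circ T$ is an isometry of $L^2(X, \mu)$, so this follows from the Von Neumann Ergodic Theorem.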
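Here is a small finite sketch of the theorem, using a hypothetical toy system. Take $X = \{0, \ldots, m-1\}$ with the uniform probability measure and $T(i) = i + 1 \bmod m$. The operator $f \mapsto f \circ T$ is then the cyclic shift on $\mathbb{C}^m$, which is unitary. Since this $T$ is ergodic, the only invariant functions are the constants, so $P f$ should be the constant function equal to the mean of $f$. The sketch computes the averages $\frac{1}{N} \sum_{k=0}^{N-1} f \circ T^k$ and measures their $L^2$ distance to $P f$.

```python
import math


def koopman(f):
    """(f o T)(i) = f((i + 1) mod m): a cyclic shift, hence unitary on C^m."""
    return f[1:] + f[:1]


def ergodic_average(f, N):
    """(1/N) * sum_{k=0}^{N-1} f o T^k."""
    total = [0j] * len(f)
    g = list(f)
    for _ in range(N):
        total = [t + gi for t, gi in zip(total, g)]
        g = koopman(g)
    return [t / N for t in total]


def invariant_projection(f):
    """Orthogonal projection onto the T-invariant (here: constant) functions."""
    mean = sum(f) / len(f)
    return [mean] * len(f)


def distance(f, g):
    """L^2 distance for the uniform probability measure on {0, ..., m - 1}."""
    return math.sqrt(sum(abs(a - b) ** 2 for a, b in zip(f, g)) / len(f))


f = [1, 2, 3, 4, 5]
Pf = invariant_projection(f)
for N in (7, 103, 1003):
    print(N, distance(ergodic_average(f, N), Pf))  # shrinks as N grows
```

Here the error is exactly zero whenever $N$ is a multiple of $m$, since a full period of the shift averages each coordinate over every value of $f$.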

Alright! We’ve seen some of the foundational results in hilbert space theory, and it’s worth remembering that our techniques from the banach space world still apply. Hilbert spaces are very common in analysis, with applications in PDEs, Ergodic Theory, Fourier Theory and more. The ability to basically do algebra as we would expect, and leverage our geometric intuition, is extremely useful in practice.

Next time, we’ll give a quick tour of applications of the Baire Category Theorem, and then it’s on to the Fourier Transform on $\mathbb{R}^n$!

The qual is a week from today, but I’m starting to feel better about the material. This has been super useful in organizing my thoughts, and if I’m lucky, you all might find them helpful as well.

If you have been, thanks for reading! And I’ll see you next time ^_^.

-

The proof is pretty easy once we have a bit more machinery.

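Take a nonzero functional $\varphi \in H^*$. Its kernel is a closed proper subspace, so $(\ker \varphi)^\perp$ contains a unit vector $z$, and $\varphi(z) \neq 0$. For any $x \in H$, the vector $x - \frac{\varphi(x)}{\varphi(z)} z$ lies in $\ker \varphi$, hence is orthogonal to $z$, which gives $\langle z, x \rangle = \frac{\varphi(x)}{\varphi(z)}$. So $y = \overline{\varphi(z)} z$ satisfies $\langle y, x \rangle = \varphi(x)$ for every $x$.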

-

Though we really shouldn’t, it seems to be unchangeable at this point.

I tried switching over a few years ago, but it made communication terribly confusing. Monomorphisms, for instance, cancel on the left with the usual notation, but cancel on the right with the other notation. I tried to get around this by remembering monos “cancel after” and epis “cancel before”, but I got horribly muddled up anytime I tried to talk with another mathematician.

Teaching and communication are extremely important to me, so I sacrificed my morals and went back to functions on the left. ↩

-

And to evangelize. Until we switch over to functions on the right, this really is the correct convention.

So I suppose the “mathematician” convention is correct, but inconsistent with how we (incorrectly) write function application on the left, while the “physicist” convention is incorrect, but consistent with the rest of our (incorrect) notation… What a world to live in :P.

If you happen to have a convincing argument for using the other convention, though, I would love to hear it! ↩

-

It’s worth taking a moment to ask yourself why we can’t use polarization to turn every normed space into an inner product space. The answer has to do with the parallelogram law. ↩

-

In fact, I mentioned this last time as well, but this feature characterizes hilbert spaces! If every closed subspace of a given banach space is complemented, then that banach space is actually isomorphic (as a banach space) to a hilbert space! ↩

-

This does not mean the terms are absolutely summable! ↩

-

This isomorphism is in the category of hilbert spaces and unitary maps, so it is automatically an isometry. ↩

-

The natural domain of a periodic function really is the circle $\mathbb{T} = \mathbb{R}/\mathbb{Z}$, rather than $\mathbb{R}$.

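There is a notion of fourier transform on arbitrary (locally compact) abelian groups. This “pontryagin duality” swaps “compactness” and “discreteness” (amongst other things), and we already see this here: $\mathbb{T}$ is compact, while the fourier coefficients of a function on $\mathbb{T}$ are indexed by the discrete group $\mathbb{Z}$.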

Abstract harmonic analysis (the branch of math that studies this duality theory) seems really interesting, and I want to learn more about it when I have the time. It seems to have a lot of connections to representation theory, which is also on my to-learn list. ↩

-

The case of

If

-

This idea of averaging to land on a fixed point is both common and powerful. It is the key idea behind Maschke’s Theorem and many other results. I’ve actually been meaning to write a blog post on these kinds of averaging arguments, but I haven’t gotten around to it yet… ↩