Some Questions about the Mathematics of Hitomezashi

19 Dec 2021 - Tags: sage

A little while ago I made a post with some sage code that makes pretty pictures based on a certain Japanese stitching based off of Hitomezashi Sashiko. It’s fun to play with, and I love making pretty pictures, but it also raises some interesting combinatorial questions! Questions which, unfortunately, I’m not sure how to answer. This post is about some partial progress that I’ve made, and also shows how I’ve been using sage to develop conjectures for these problems. I’ll end with the statement of a few of these conjectures, and if I’m lucky some readers will have ideas for attacking these problems!

First things first, let’s give a slightly more precise definition of the

objects of interest. To each pair of binary strings

We have vertices

Intuitively, we alternate putting a wall between adjacent cells of a grid.

A

As a small example, let’s consider the strings

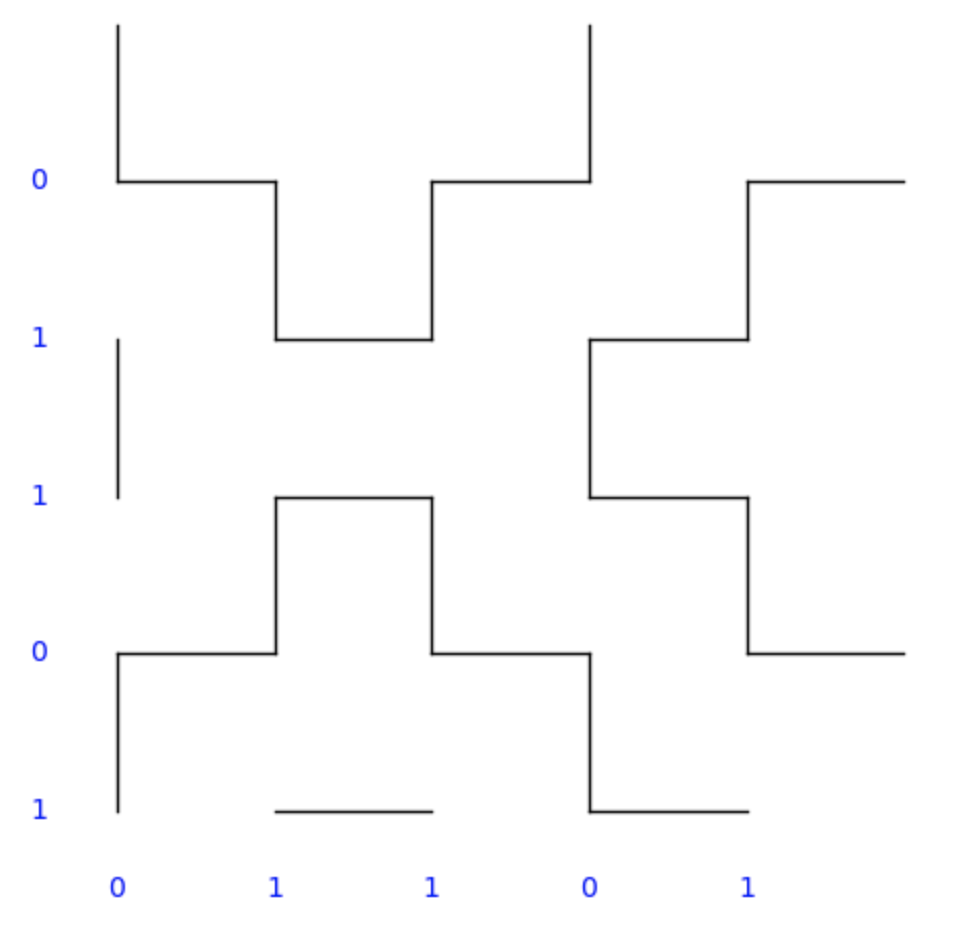

Then the resulting stitch pattern (as drawn by the code in the previous post) is

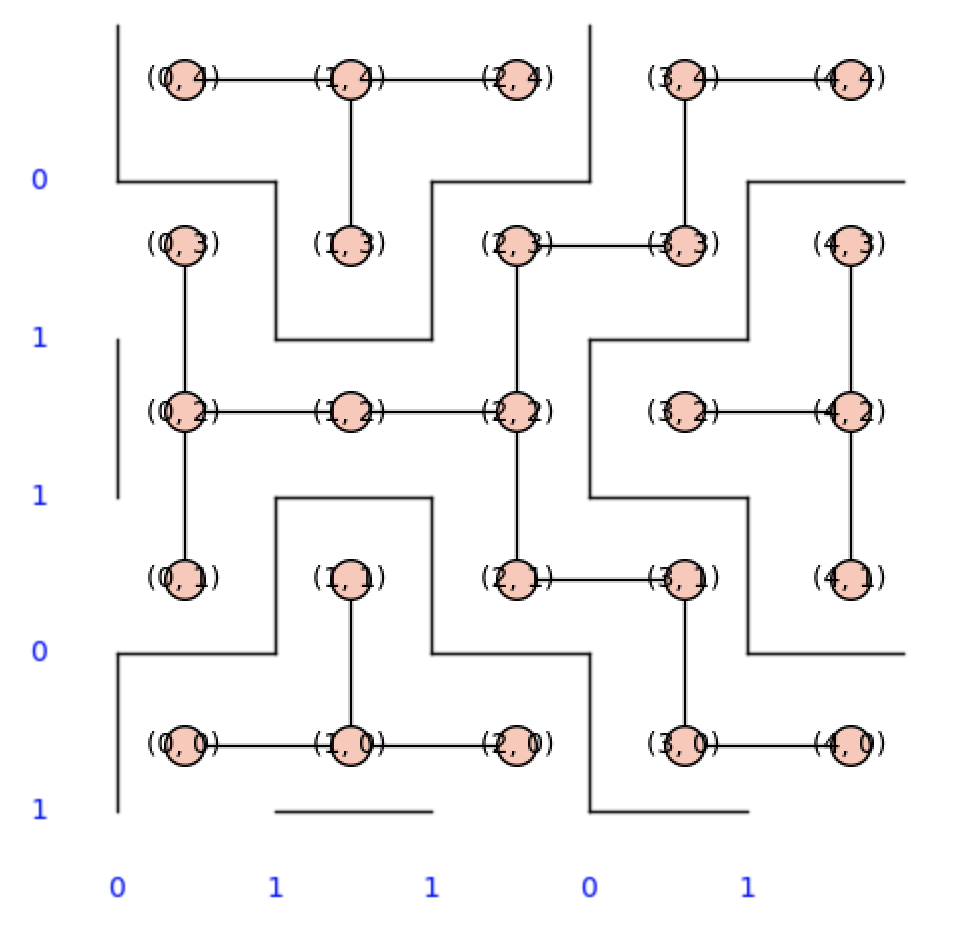

and the graph looks like this (overlayed on it):

(Notice the “regions” in the picture are exactly connected components of the graph)

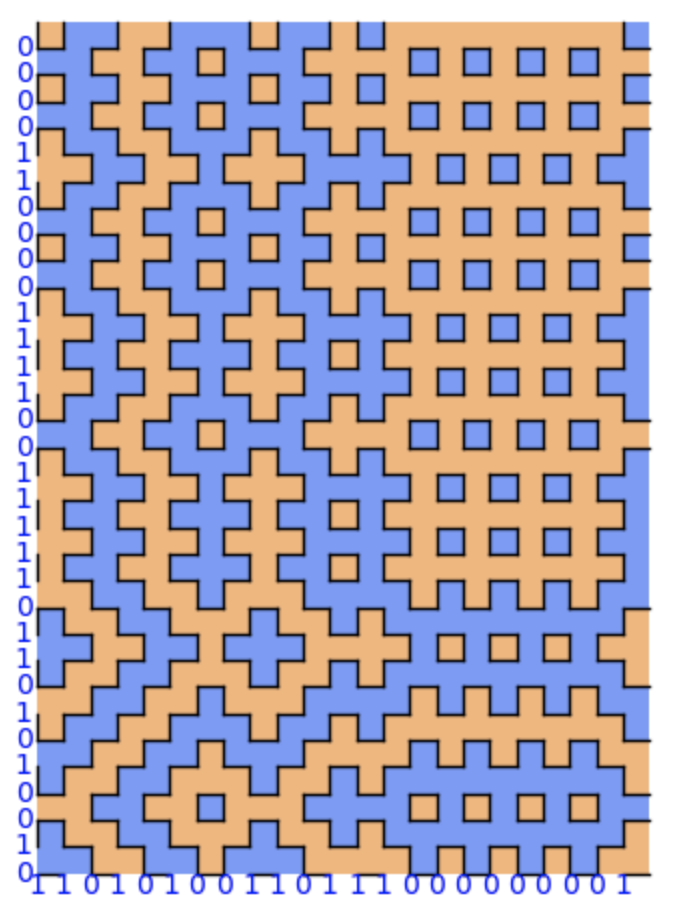

Now there’s a lot of of questions we can ask about these pictures. Let’s build a larger one to make things a bit more obvious:

Say

then our picture looks like this:

and already there’s some obvious questions to ask.

-

How many

-

How many regions do we expect to get in total? And how big do we expect the regions to be?

There are other natural questions as well, but these are interesting to me because I can solve them, and because I can’t solve them (respectively).

You should play around with making your own hitomezashi pictures, and try to come up with your own questions about them!

Feel free to leave any conjectures or work of your own in the comments here ^_^

Let’s start with the expected number of

The probability that any given square

Now (using linearity of expectation) we compute

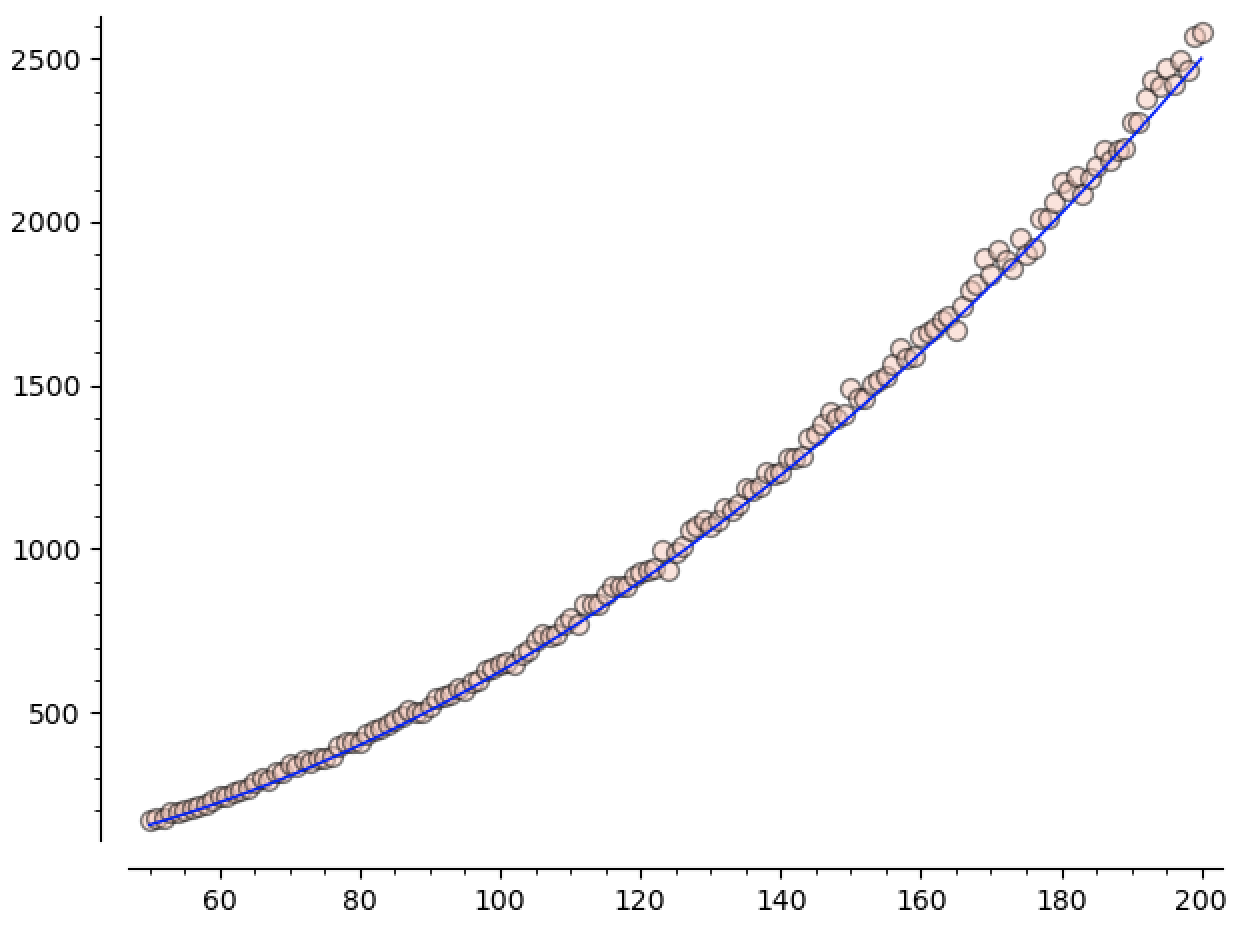

Of course, we can also code this up and check! Here is some code that

- makes and draws Hitomezashi graphs

- builds a random graph of size

- runs this 50 times for boards of size 50 to 200, keeping track of the

average number of singletons for each choice of

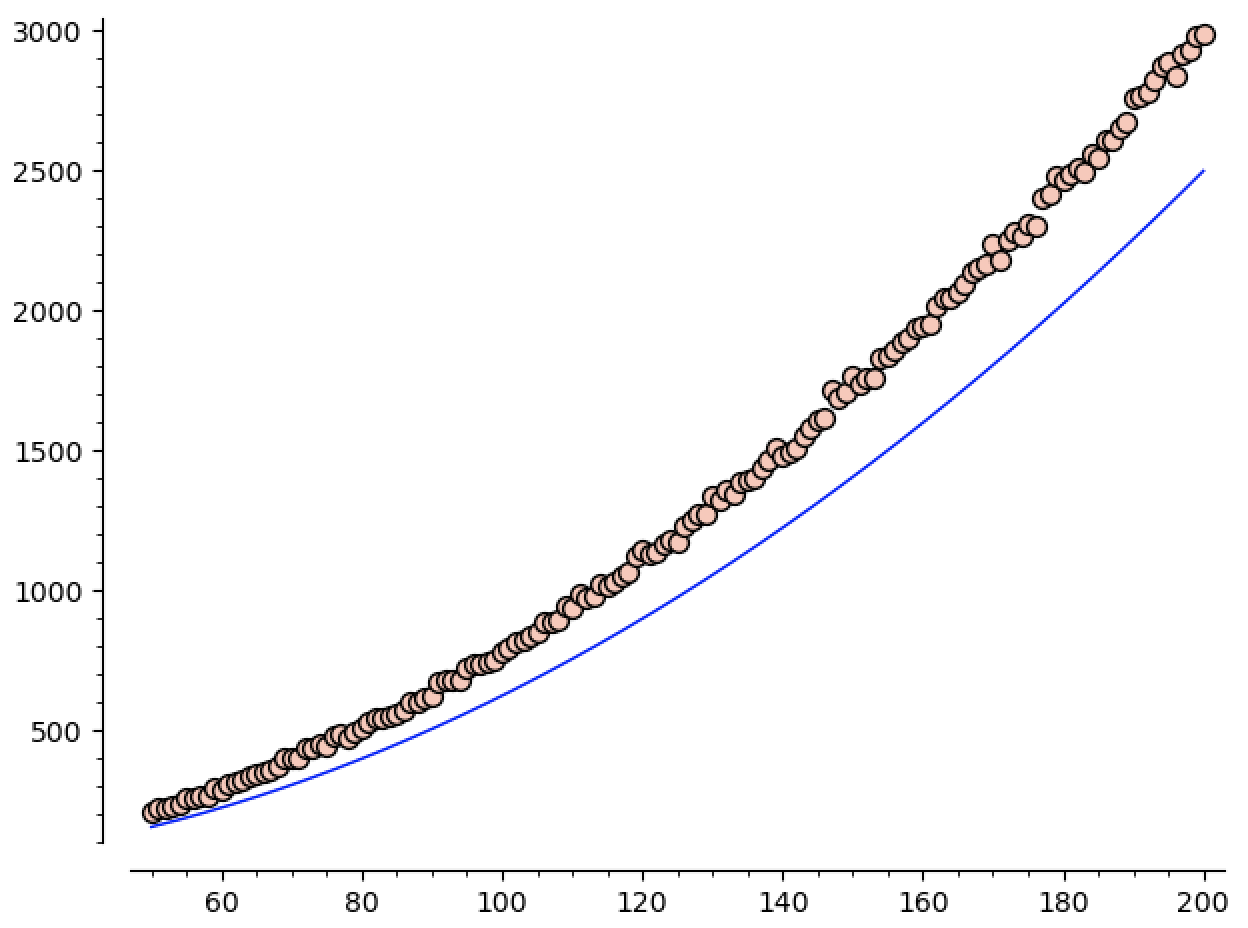

xxxxxxxxxxdef mkHitomezashi(s1,s2): """ Make a hitomezashi graph based on @s1 and @s2 """ m = len(s1) n = len(s2) vs = [(x,y) for x in range(m) for y in range(n)] es = [] for x in range(m): for y in range(n): if x+1 < m and y%2 != s1[x+1]: es += [((x,y),(x+1,y))] if y+1 < n and x%2 != s2[y+1]: es += [((x,y),(x,y+1))] return Graph([vs,es], format='vertices_and_edges')def drawHitomezashi(G): """ Draw the hitomezashi graph using the veretex names as positions """ pos = {} for v in G.vertices(): pos[v] = v return G.graphplot(pos=pos)def runTest(n,p=None,q=None): """ Build a random @n by @n graph, and get some stats @p is the probability of getting a 0 in the rows @q is the probability of getting a 0 in the columns """ if p == None: p = 0.5 if q == None: q = 0.5 s1 = [int(random() < p) for blah in range(n)] s2 = [int(random() < q) for blah in range(n)] G = mkHitomezashi(s1,s2) # This is a list of the sizes of the connected components, # in decreasing order of size. return G.connected_components_sizes()def getNumSingletons(n, N, p=None, q=None): """ get the mean and std dev number of singletons run @N many tests on graphs of size @n """ numSingletons = [] for _ in range(N): ccSizes = runTest(n,p,q) numSingletons += [ccSizes.count(1)] return (mean(numSingletons), sqrt(variance(numSingletons)))def plotNumSingletons(n0,n1,N=None,p=None,q=None): """ Run @N trials on sizes @n0 to @n1 and plot/return the data """ if N == None: N = 100 data = [] for n in range(n0,n1+1): print(n) (avg,std) = getNumSingletons(n,N,p,q) data += [(n, avg.n())] show(scatter_plot(data) + plot(x^2/16, x, n0,n 1)) return data# plotNumSingletons(50,200,50)Here you can see the data plotted against

Of course, we can do a similar analysis to see that there are going to be

As a (fun?) exercise, how many plus-shapes do we expect to get in

a random

Keep in mind that the existence of certain walls will no longer be independent.

Now let’s move on to the number of regions, and the largest region size.

We just computed that there’s

Again, we can write some code to test this out:

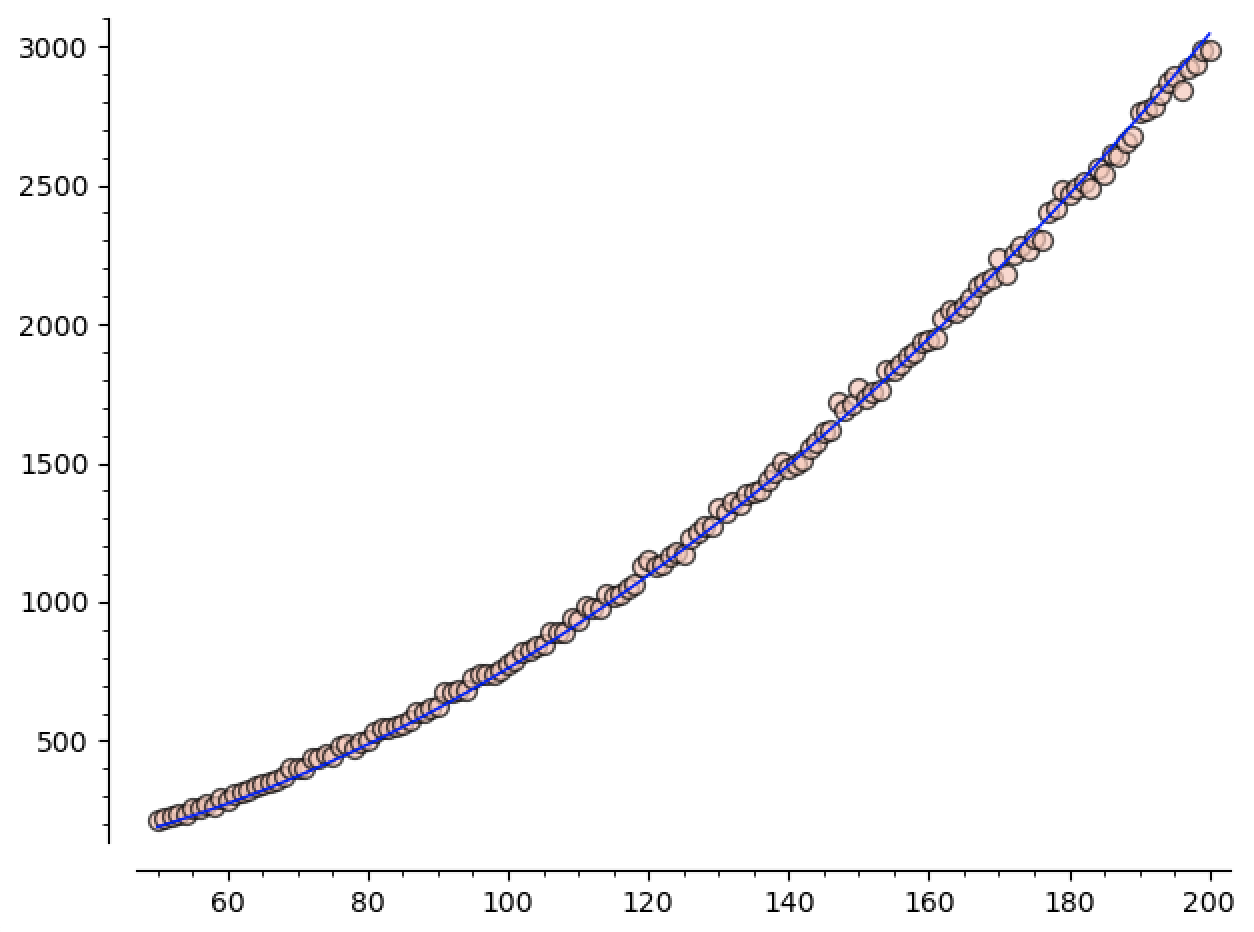

xxxxxxxxxxdef getNumCCs(n, N, p=None, q=None): """ get the mean and std dev number of CC (connected components) run @N many tests on graphs of size @n """ numCCs = [] for _ in range(N): ccSizes = runTest(n,p,q) numCCs += [len(ccSizes)] return (mean(numCCs), sqrt(variance(numCCs)))def plotNumCCs(n0, n1, N=None, p=None, q=None): """ Run @N trials on sizes @n0 to @n1 and plot/return the data """ if N == None: N = 100 data = [] for n in range(n0, n1+1): print(n) (avg,std) = getNumCCs(n,N,p,q) data += [(n, avg.n())] show(scatter_plot(data)) return data# CCData = plotNumCCs(50,200,50)Here you can see the number of regions plotted against

We know that we expect the number of regions to grow like

xxxxxxxxxxa = var('a')model(n) = a*n^2# this numerically finds coefficients for the model# to make it best match the data somehow. # I'm not enough of a statistician to say moresol = find_fit(CCData, model)guess(n) = model(a=sol[0].rhs())When we run this, we get

looks pretty good when we plot it against our data:

Since

We can check how good this model is, both inside and outside the range of data we used to define it, by running a few more tests.

For instance, running

That’s pretty good! But how well does

-

-

Let’s push our luck! Sage tells us

I’m definitely calling these a “win” for the formula!

They’re drifting apart, and I’m sure that’s because the

model isn’t completely right. The standard deviation for how many

regions we get is also growing as a function of

Regardless, this brings me to the first

Conjecture:

- (Easy?):

The expected number of regions in an

- (Hard?):

Pin down the constant in the expected number of regions

Again, I’m not a probabilistic combinatorialist, so these sorts of questions are a bit outside my wheelhouse. I can’t tell if they’re either quite simple or almost impossible, but I know I (unfortunately) have other things to work on3 and can’t spend any more time on this. Hopefully one of you will be able to tackle these! ^_^

Lastly, the maximum region size.

Let’s say we know that there are

Assuming the easy conjecture from the previous section (

This is better than nothing, but the size of the largest reason seems

to be growing with

We can do a bit better, though. We know that most of these regions are singletons! So the other regions are going to have to be bigger to compensate.

As a (fun?) exercise, can you lower bound the size of the largest region using this idea?

I’ll leave my attempt in a foothote4

Since I don’t have any more ideas for trying to solve this problem, let’s at least get some data and make a conjecture!

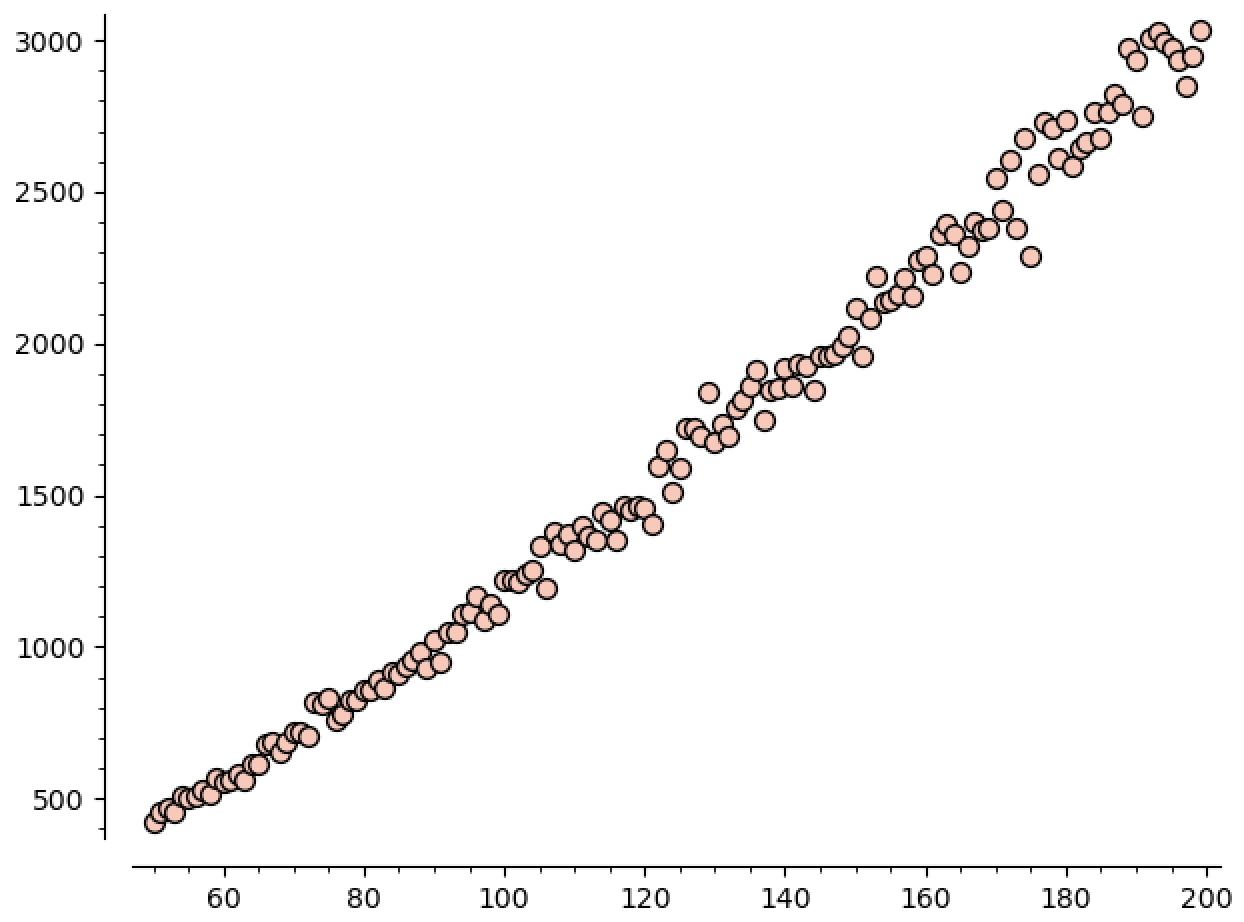

xxxxxxxxxxdef getMaxCCs(n, N, p=None, q=None): """ get the mean and std dev size of the largest CC run @N many tests on graphs of size @n """ maxCCs = [] for k in range(N): print("Running test ", k, "/", N) ccSizes = runTest(n,p,q) maxCCs += [ccSizes[0]] return (mean(maxCCs), sqrt(variance(maxCCs)))def runMaxCCTest(n0,n1,N,p=None,q=None): out = [] for n in range(n0,n1+1): (m,s) = getMaxCCs(n,N,p,q) out += [(n,m.n())] return out# maxCCData = runMaxCCTest(50,200,50)If we plot this data, we can see how the size of the largest

region is growing with

This plot really embarrasses the lower bound of

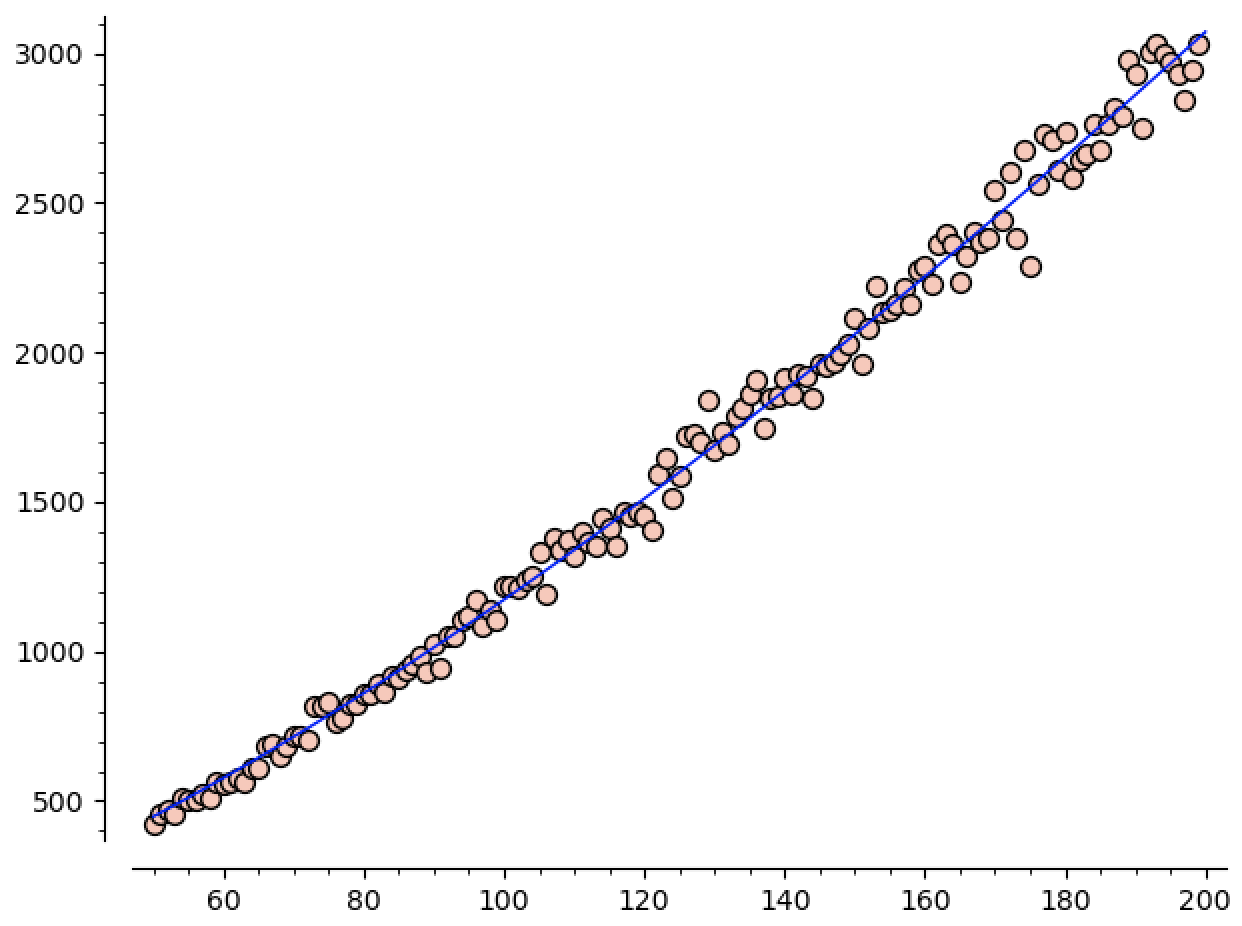

xxxxxxxxxxa = var('a') b = var('b') model(n) = a * n^b sol = find_fit(maxCCData, model) guess(n) = model(a=sol[0].rhs(), b=sol[1].rhs()) # this makes guess(n) = 1.9573061548255626*n^1.3888112924129952Now we see the guess is

Plotting this against our data gives

You can tell the standard deviation is higher here. Especially

as

-

-

-

If we push our luck again and look at

-

How far can we push things? If we run

This data then leads to a new series of conjectures

Conjecture:

- (Easy?) Can we show the expected size of the biggest region is

- (Medium?) What about

- (Hard?) Can we show that it is

- (Extremely Hard?) What’s the coefficient in front? We expect it’s probably

I think this kind of use case for sage is really powerful! It’s a great tool not only for solving concrete problems that arise in research, but also for formulating new conjectures and playing around with lots of concrete examples at once! Hopefully this showcases another angle on how we can use sage in the wild to work on our research problems.

Also, again, if anyone makes any progress on these, please let me know! I would love to know anything you’re able to figure out ^_^

-

A huge thanks to Brandon Curtis for the blog of sage resources! In particular the model fitting example here was super helpful. ↩

-

Thankfully one of my best friends is a statistician. He’s been a huge help for this whole process, and you can find him on twitch ↩

-

I really want to start writing music so that I’ll have something by the end of the year. Also there’s the upcoming posts on fourier analysis, etc. ↩

-

We know that there are

So the average nonsingleton region has

Assuming the conjecture

I don’t think this approach can be saved, either. We know the expected number of singletons, so the numerator can only be

We could also try including more small regions, say by counting plus signs as well as singletons. But this would just improve the constant (do you see why?). What we would need to know is that there’s a lot of “small” regions where “small” now isn’t a constant, but depends on