A Follow Up -- Explicit Error Bounds

05 Jan 2022 - Tags: quick-analysis-tricks , sage

In my previous post I talked about how we can use probability theory to make certain scary looking limits obvious. I mentioned that I would be writing a follow up post actually doing one of the derivations formally, and for once in my life I’m going to get to it quickly! Since I still consider myself a bit of a computer scientist, it’s important to know the rate at which limits converge, and so we’ll keep track of our error bounds throughout the computation. Let’s get to it!

Let

In the interest of exposition, I’ll show far more detail than I think is required for the problem. Hopefully this shows how you might come up with each step yourself.

Let

Now we compute

Now we use an extremely useful trick in measure theory! We’ll break up this integral into two parts. One part where the integrand behaves well, and one part (of small measure) where the integrand behaves badly.

Using our intuition that

We’ll be able to control the first part since there our integrand is

So we split our integral:

Now since

Since

Putting these estimates into the above integral, we find1

since we can pull the constants out of the integral, then get the measures of the “good” and “bad” sets.

Now the good set has measure at most

So plugging back into our integral, we get

We want to show that this goes to

We can choose any

If we pick

and a tedious L’hospital rule computation shows that this is eventually less

than

So what have we done?

We started with

and we showed this is at most

So, overall, we have

as promised.

After I got through with this proof, I wanted to see if it agreed with some

simple experiments. The last step of this, where we played around with the

So then, let’s try to approximate some functions! I’ve precomputed these examples, but you can play around with the code at the bottom if you want to.

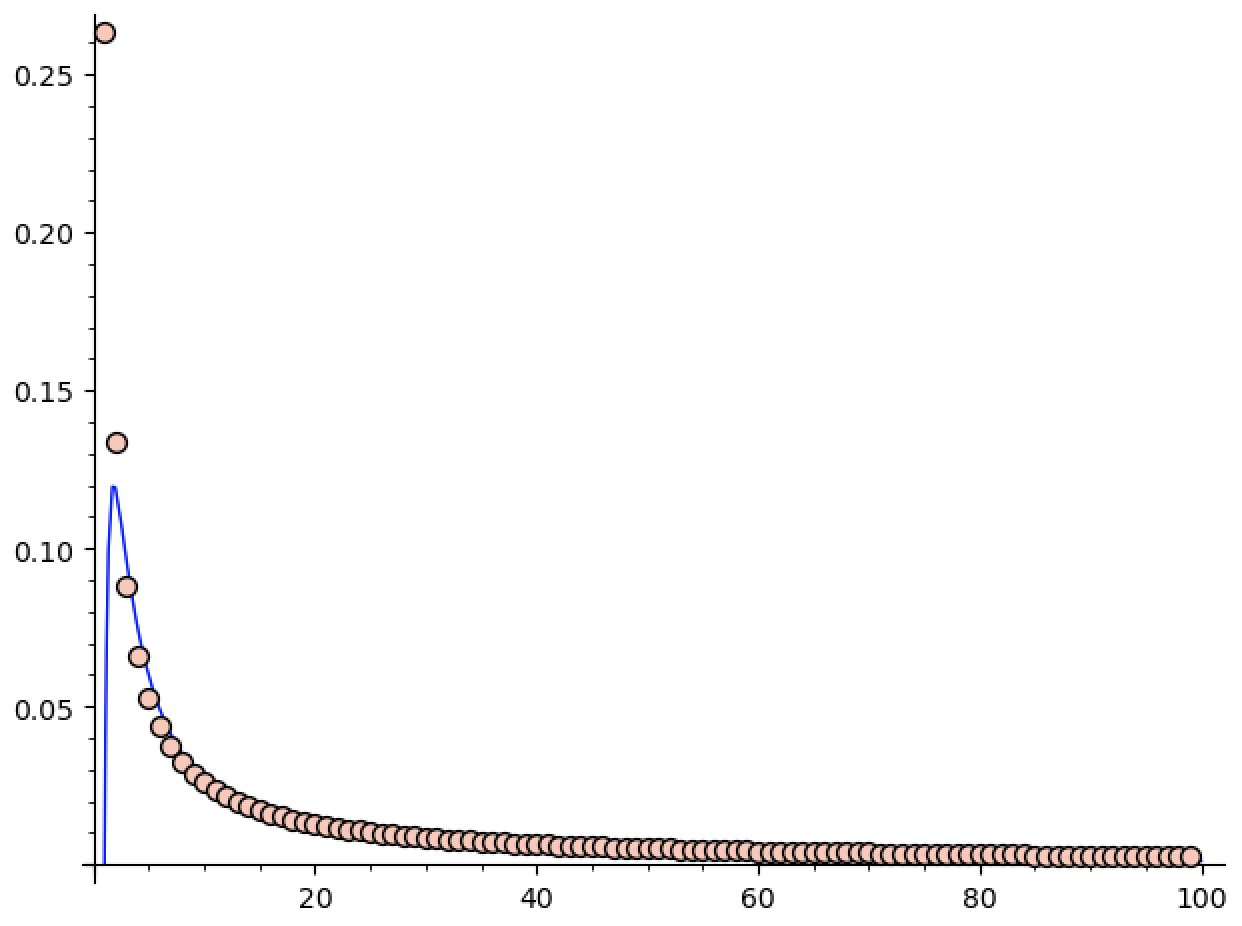

First, let’s try to approximate

(Edit: I realized I never said what these pictures represent. The

That’s fine, though. It’s more than possible that I was sloppy in this

derivation, or that tighter error bounds are possible

(especially because

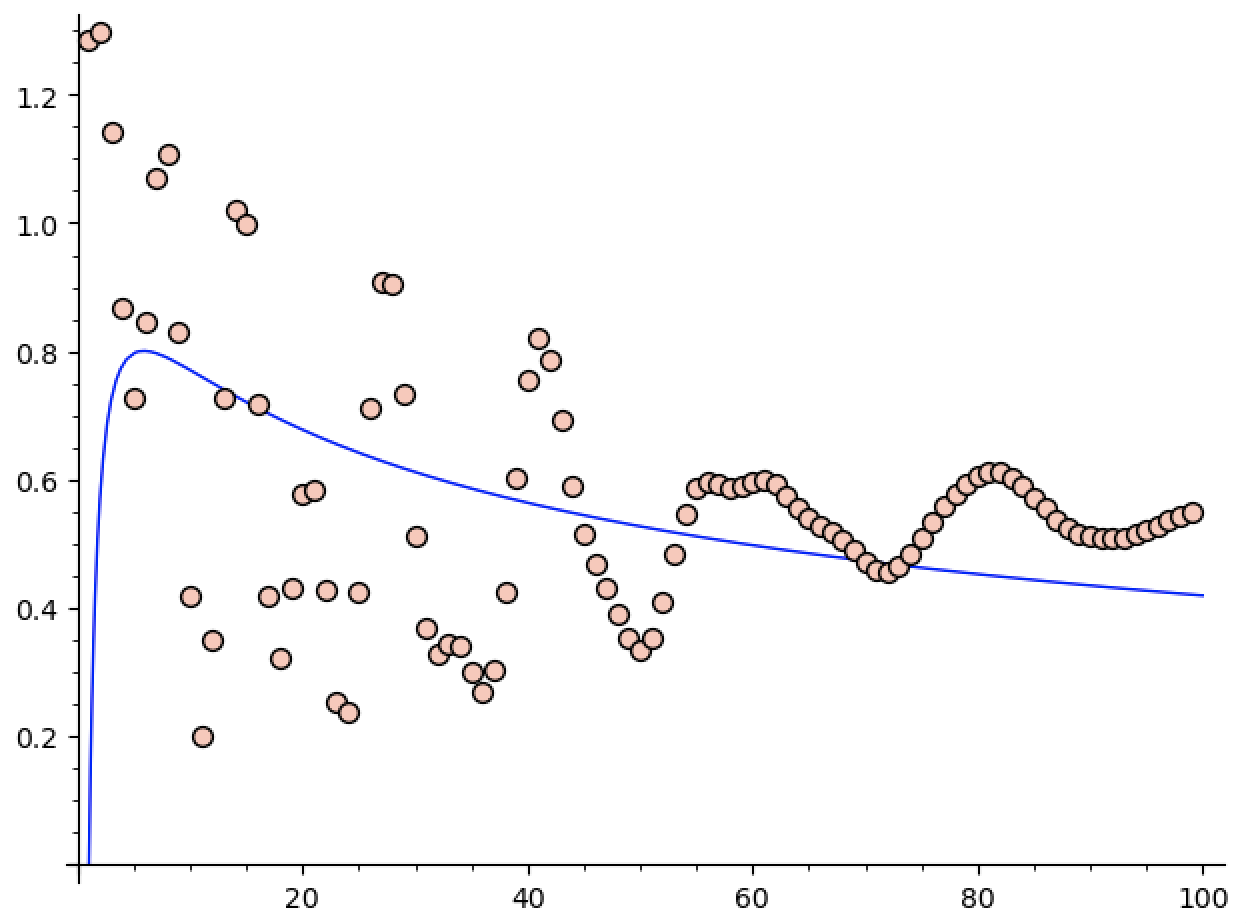

So let’s try it on a more difficult function4. What if we try to

approximate

Now we get some oscillatory behavior, which seems to be stabilizing.

At least the guess in this case is closer to what we computed, as the

blue graph is roughly

The fact that this example gives something fairly close to the bounds we

ended up getting makes me feel better about things. In fact, after a bit

of playing around, I can’t find anything where sage guesses

the decay is slower than

If you want to play around for yourself, I’ve left the sage code below!

xxxxxxxxxxdef approx(f,x,n, verbose=False): """ Compute the nth approximation of f at x according to our formula """ total = 0 # We're only summing the first 500 terms of the series, # and that causes some distortion for large values of n for k in range(500): total += (exp(-n*x) * ((n*x)^k / factorial(k)) * f(x=k/n)).n() return total.n()def test(f, N=100, x=None, showPlot=False): """ Get the error between f(x) and approx(f,x,n) as n goes from 1 to @N. If no x is given, pick one randomly in (-1,1). Return the error data, and plot it if @showPlot is true """ if x == None: x = RR(uniform(-1,1)) target = f(x=x).n() data = [] n = 1 while n < N: a = approx(f,x,n) data += [ (n, abs(a - target)) ] n += 1 a,b,n = var('a,b,n') model(n) = a * n^b * log(n) sol = find_fit(data,model) guess(n) = model(a=sol[0].rhs(), b=sol[1].rhs()) show(guess) if showPlot: scatterPlot = scatter_plot(data) guessPlot = plot(guess, n, 1, N) show(scatterPlot + guessPlot) return (x, guess, data)def _(f=sin(x), x=3/4, auto_update=False): _ = test(f, x=x, showPlot=True)I’m pretty confident in what we’ve done today, but I can’t help but feel like there’s a better way, or that there’s slightly tighter bounds to be had. If there’s anyone particularly skilled in “hard” analysis reading this, I would love to hear your thoughts ^_^.

In any case, this has been fun to work through! I’ll see you all soon!

-

In the interest of continuing the quick analysis trick tradition of saying the obvious thing, notice what we’ve done here. We want to control an integral. How do we do it?

We break it up into a “good” piece, which is big, and a “bad” piece, which is small.

The “good” piece we can control because it’s good. For us, this means

The “bad” piece we can’t control in this way. Indeed it’s defined to be the piece that we can’t control in this way. Thankfully, if all is right with the world, the “bad” piece will be small! So we get some crummy (uniform) estimate on the bad piece, and then multiply by the size of the piece. Since the size is going to

-

Notice just like we were content with a crummy bound on the integrand of the bad piece because we’re controlling the measure, we’re content with a potentially crummy bound on the measure of the good piece because we’re controlling the integrand!

Of course, for our particular case,

-

In fact,

is horrendous. ↩

-

Interestingly, while trying to break things, I realized that I don’t really have a good understanding of “pathological” bounded lipschitz functions. Really the only tool in my belt is to make things highly oscillatory…

Obviously we won’t have anything too pathological. After all, every (globally) lipschitz function is differentiable almost everywhere, and boundedness means we can’t just throw singularities around to cause havoc. But is oscillation really the only pathology left? It feels like there should be grosser things around, but it might be that they’re just hard to write down.

Again, if you have any ideas about this, I would also love to hear about it! ↩