Measure Theory and Differentiation (Part 2)

31 Aug 2021 - Tags: measure-theory-and-differentiation, analysis-qual-prep

This post has been sitting in my drafts since Feb 22, and has been mostly done for a long time. But with my analysis qual coming up, I’ve finally been spurred into finishing it. My plan is to put up a new blog post every day this week, each going through some aspect of the analysis that’s going to be on the qual. Selfishly, this will be great for my own preparation (I definitely learn through teaching), but hopefully it will also help future students who want to see a motivated treatment of the standard analysis curriculum.

The first half of this post is available here, as well as at the measure theory and differentiation tag. I’m also going to make a new tag for this series of analysis qual prep posts, and I’ll retroactively add part 1 to that tag.

With that out of the way, let’s get to the content!

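In part 1 we talked about two ways of associating (regular, Borel) measures to functions on $\mathbb{R}$:

- To an increasing, right continuous function $F$, we associate the measure $\mu_F$ with $\mu_F((a,b]) = F(b) - F(a)$.

- To a positive, locally $L^1$ function $f$, we associate the measure $m_f$ with $m_f(E) = \int_E f \, dm$.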

Perhaps surprisingly, we can go the other way too!

-

Given a measure

-

Given a measure

These facts together give us a correspondence

You should think of the increasing, right-continuous functions

As an exercise to recap what we did in the last post, prove that every monotone function $f : \mathbb{R} \to \mathbb{R}$ is differentiable almost everywhere.

This result can be proven without the machinery of measure theory (see, for instance, Botsko’s An Elementary Proof of Lebesgue’s Differentiation Theorem), but the proof is much more delicate, and certainly less conceptually obvious. Also, some sort of machinery seems to be required. See here, for instance.

This should feel somewhat restrictive, though. There’s more to life than increasing, right continuous functions, and it would be a shame if all this machinery were limited to functions of such a specific form. Can we push these techniques further, and ideally get something that works for a large class of functions? Moreover, can we use these techniques to prove interesting theorems about this class of functions? Obviously I wouldn’t be writing this post if the answer were “no”, so let’s see how to proceed!

Differentiation is a nice motivation, but integration is theoretically much simpler. We can’t expect to be able to differentiate most functions, but it is reasonable to want to integrate them. With this in mind, rather than trying to guess the class of functions we’ll be able to differentiate, let’s try to guess the class of functions we’ll be able to integrate. Then we can work backwards to figure out what we can differentiate.

Previously we were restricting ourselves to positive locally

The correspondence says to take

If we meditate on what properties

A Complex Measure on a

Notice

⚠ Be careful! Now that our measures allow nonpositive values, we might

“accidentally” have

Because of this, we redefine the notion of nullset to be more restrictive:

We say

As an exercise, can you come up with a concrete signed measure

As another exercise, why does this agree with our original definition of nullsets when we restrict to positive measures?

Now, we could try to build measure theory entirely from scratch in this setting. But that seems like a waste, since we’ve already done so much measure theory… It would be nice if there were a way to relate signed measures to ordinary (unsigned) measures and leverage our previous results!

We know that

So we should expect

and the Jordan Decomposition Theorem says5 that we can decompose

every complex measure

Formally, it says that every complex measure
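For reference, here is a sketch of the usual textbook form of this decomposition. The names $\nu$, $\nu_r$, $\nu_i$ and the $\pm$ superscripts are assumed notation (the standard one), not necessarily the symbols used elsewhere in this post.

```latex
% Assumed notation: \nu is a complex measure,
% \nu_r and \nu_i are its real and imaginary parts (finite signed measures).
\[
  \nu = \nu_r + i\,\nu_i,
  \qquad
  \nu_r = \nu_r^+ - \nu_r^-,
  \qquad
  \nu_i = \nu_i^+ - \nu_i^- ,
\]
% where \nu_r^\pm and \nu_i^\pm are finite positive measures satisfying
\[
  \nu_r^+ \perp \nu_r^-
  \quad \text{and} \quad
  \nu_i^+ \perp \nu_i^- .
\]
```

The mutual singularity is what makes the positive and negative parts unique.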

It can still be nice to work with an unsigned measure directly sometimes,

rather than having to split our measure into

There is a positive measure

This possesses all the amenities the notation suggests, including:

- (Triangle Inequality)

- (Operator Inequality)

- (Continuity)
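As a rough guide, the first two properties are usually stated as follows. This is a sketch under assumptions: $\nu, \nu_1, \nu_2$ are complex measures, $\mu$ is any positive measure with $d\nu = f \, d\mu$ for some $f \in L^1(\mu)$, and $g$ is a test function; none of these names are fixed by the text above.

```latex
% Total variation measure (the result does not depend on the choice of \mu and f):
\[
  d\nu = f \, d\mu
  \quad \Longrightarrow \quad
  d|\nu| = |f| \, d\mu .
\]
% Triangle inequality:
\[
  |\nu_1 + \nu_2| \le |\nu_1| + |\nu_2| .
\]
% Operator inequality, for g \in L^1(|\nu|):
\[
  \left| \int g \, d\nu \right| \le \int |g| \, d|\nu| .
\]
```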

In fact, the collection of complex measures on

Ok. This has been a lot of information. How do we actually compute with a complex measure? Thankfully, the answer is easy: We use the Jordan Decomposition. We define
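One standard way to write this definition is sketched here. It assumes the complex measure is called $\nu$ and has been decomposed as $\nu = (\nu_r^+ - \nu_r^-) + i(\nu_i^+ - \nu_i^-)$, with all four pieces finite positive measures; the symbol names are assumptions.

```latex
\[
  \int f \, d\nu
  \;:=\;
  \int f \, d\nu_r^+ \;-\; \int f \, d\nu_r^-
  \;+\; i \int f \, d\nu_i^+ \;-\; i \int f \, d\nu_i^- ,
\]
% which makes sense whenever f is integrable against each of the four
% positive measures \nu_r^+, \nu_r^-, \nu_i^+, \nu_i^-.
```

Each term on the right is an ordinary integral against a positive measure, so nothing new needs to be built.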

In particular, in order to make sense of this integral, we need to know

that

As an easy exercise, show that the dominated convergence theorem is true when we’re integrating against a complex measure.

We can split up

Show using the definition of

So, as a quick example computation:

Notice, as usual, that once we’ve phrased the integral in terms of

Now, that example might have felt overly simplistic. After all, it was

mainly a matter of moving the

Remember last time, we had a structure theorem that told us every measure is

of the form

Lebesgue-Radon-Nikodym Theorem

If

for a

As in the unsigned case, we write

I realized while writing this post that last time I forgot to mention an important aspect of the Radon-Nikodym derivative! It satisfies the obvious laws you would expect a “derivative” to satisfy7. For instance:

- (Linearity)

- (Chain Rule) If

It’s worth proving each of these. The second is harder than the first, but it’s not too bad.
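For concreteness, here is how these two laws are usually written. The sketch assumes $\nu, \nu_1, \nu_2$ are complex measures, $\mu$ and $\lambda$ are $\sigma$-finite positive measures, and $a, b \in \mathbb{C}$; these names are illustrative.

```latex
% Linearity: if \nu_1 \ll \mu and \nu_2 \ll \mu, then
\[
  \frac{d(a\nu_1 + b\nu_2)}{d\mu}
  = a \, \frac{d\nu_1}{d\mu} + b \, \frac{d\nu_2}{d\mu}
  \qquad \mu\text{-a.e.}
\]
% Chain rule: if \nu \ll \mu and \mu \ll \lambda, then
\[
  \frac{d\nu}{d\lambda}
  = \frac{d\nu}{d\mu} \, \frac{d\mu}{d\lambda}
  \qquad \lambda\text{-a.e.}
\]
```

Both identities hold only almost everywhere, since Radon-Nikodym derivatives are only defined up to nullsets.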

At last, we have a complex measure theoretic notion of “derivative”, as well as half of the correspondence we’re trying to generalize:

Given an

But which functions will generalize the increasing right continuous ones? The answer is Functions of Bounded Variation!

To see why bounded variation functions are the right things to look at, let’s remember how the correspondence went in the unsigned case:

We took an unsigned measure

Now, for a complex measure

Then if we look at the real part, we would be looking at functions

Of course, nothing in life is so simple, and for what I assume are historical reasons, this is not the definition you’re likely to see (despite it being equivalent).

The more common definition of bounded variation is slightly technical, and is best looked up in a reference like Folland. The idea, though, is that

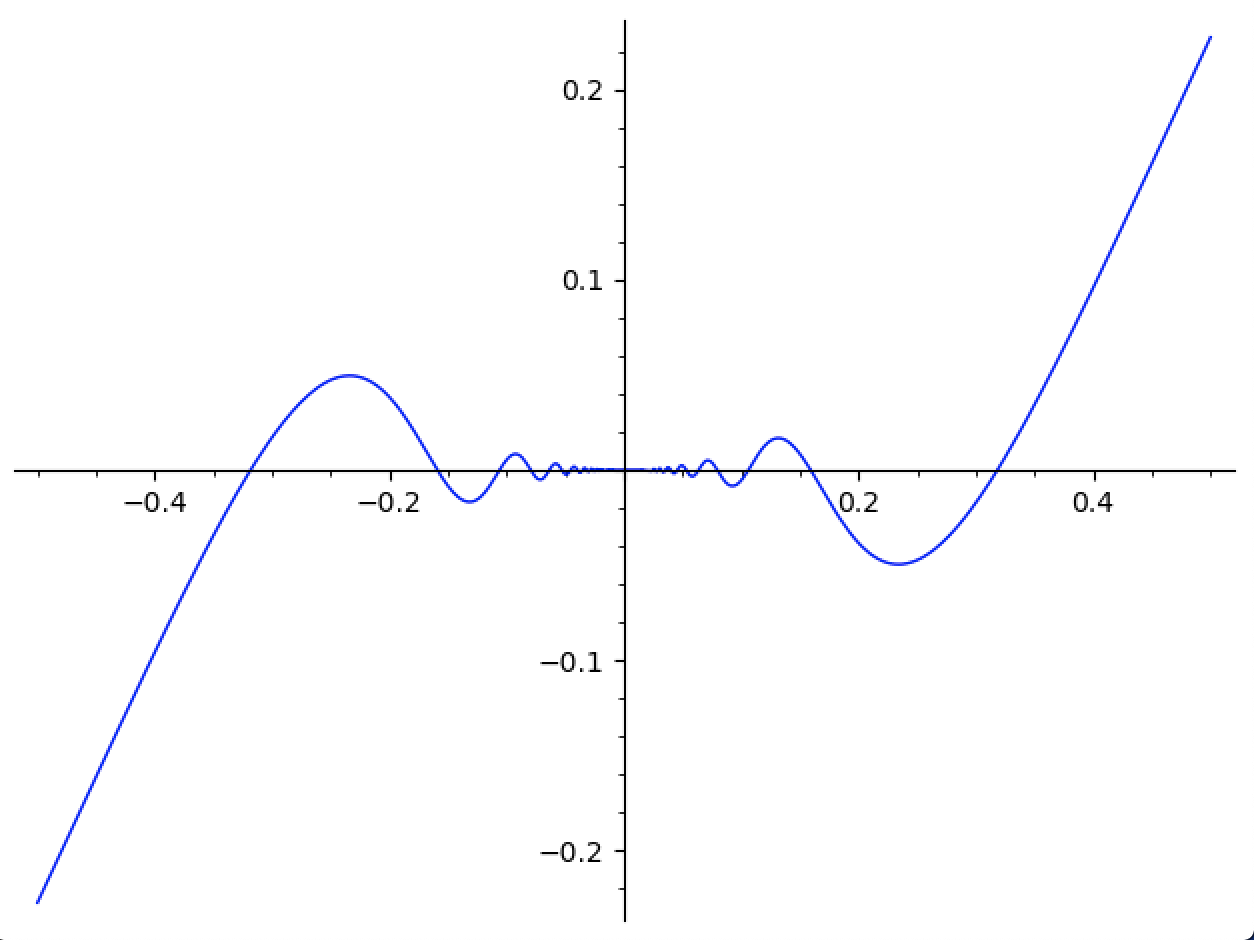

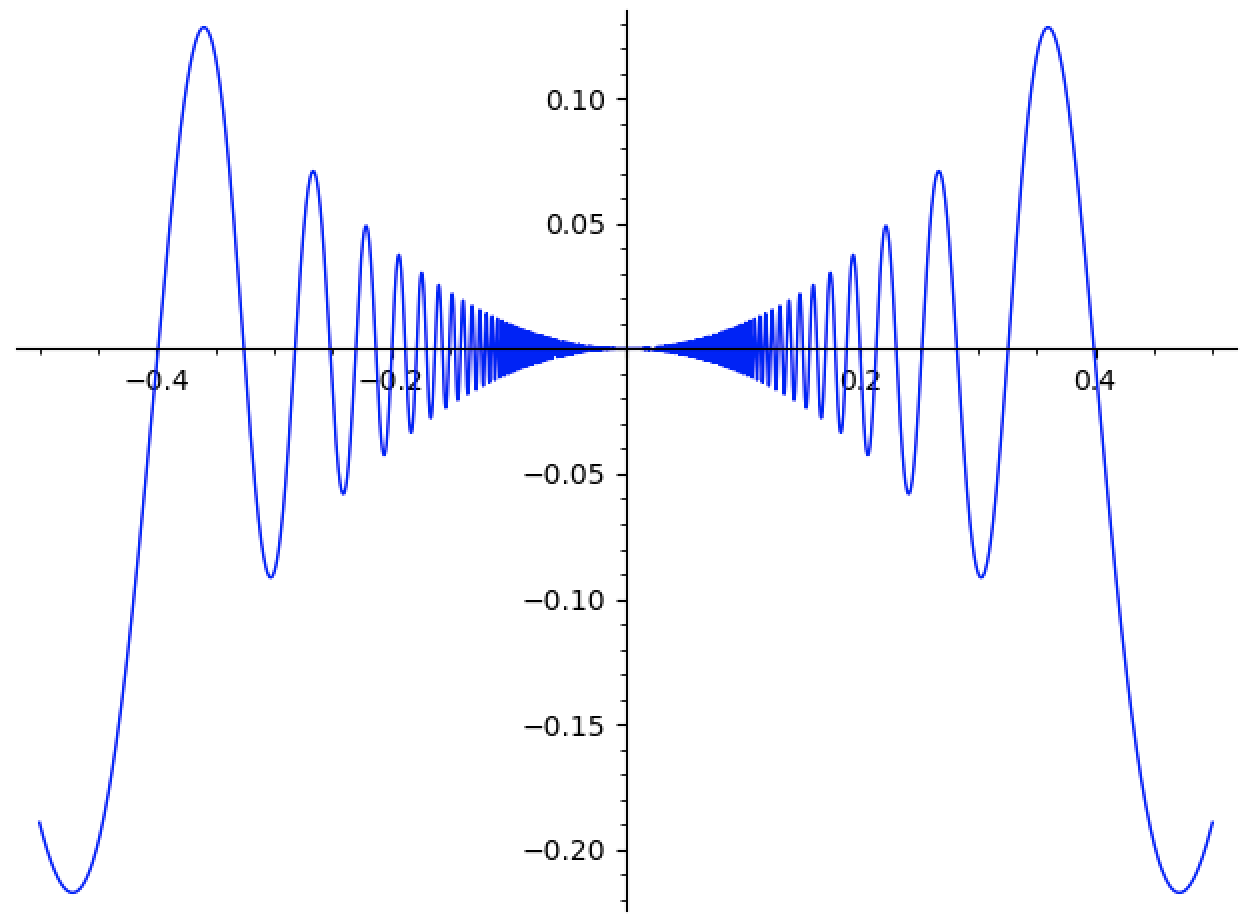

For instance,

Even though

whose wiggle-density is obviously less controlled9.
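For readers who want the technical definition on the page, here is a sketch of the version found in Folland's Real Analysis. The symbols $T_F$ and $NBV$ are Folland's notation and are assumptions here, not names introduced earlier in this post.

```latex
% Total variation function of F : \mathbb{R} \to \mathbb{C}
\[
  T_F(x) = \sup \left\{
    \sum_{j=1}^{n} \left| F(x_j) - F(x_{j-1}) \right|
    \;:\; n \in \mathbb{N},\ -\infty < x_0 < x_1 < \cdots < x_n = x
  \right\}
\]
% F has bounded variation when the total amount of wiggling is finite:
\[
  \lim_{x \to \infty} T_F(x) < \infty .
\]
% F is normalized bounded variation (NBV) when, in addition,
% F is right continuous and \lim_{x \to -\infty} F(x) = 0.
```

An increasing bounded function has $T_F(x) = F(x) - \lim_{t \to -\infty} F(t)$, which recovers the unsigned case.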

We also get some results that will be familiar from the last post. These are akin to the properties of monotone functions that relate them to increasing right continuous functions, and thus measures.

- If

- If

Lastly, if

This brings us to our punchline!

If

is normalized bounded variation.

Conversely, if

So we have correspondences:
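In symbols, the standard correspondences look roughly like this. This is a sketch in Folland-style notation: $NBV$, $\mu_F$, $F_\mu$ and $m$ (Lebesgue measure) are assumed names, and the exact diagram intended above may be arranged differently.

```latex
% Complex Borel measures on \mathbb{R}  <-->  NBV functions
\[
  \mu \ \longmapsto \ F_\mu(x) := \mu\big( (-\infty, x] \big),
  \qquad
  F \ \longmapsto \ \mu_F \ \text{ with } \ \mu_F\big( (a, b] \big) = F(b) - F(a) .
\]
% Measures absolutely continuous w.r.t. Lebesgue measure
%   <-->  absolutely continuous NBV functions  <-->  L^1 densities
\[
  \mu_F \ll m
  \iff
  F \text{ is absolutely continuous},
  \quad \text{in which case} \quad
  d\mu_F = F' \, dm
  \ \text{ and } \
  F(x) = \int_{-\infty}^{x} F'(t) \, dt .
\]
```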

Here,

In fact, the class of functions

Lebesgue Fundamental Theorem of Calculus

The Following Are Equivalent for a function
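For reference, the version of this theorem in Folland's Real Analysis reads roughly as follows. This is a sketch that assumes Folland's formulation is the one intended, with $F : [a,b] \to \mathbb{C}$, $-\infty < a < b < \infty$, and $m$ Lebesgue measure.

```latex
\begin{enumerate}
  \item $F$ is absolutely continuous on $[a,b]$.
  \item $F(x) - F(a) = \int_a^x f(t) \, dt$ for some $f \in L^1([a,b], m)$.
  \item $F$ is differentiable almost everywhere on $[a,b]$,
        $F' \in L^1([a,b], m)$, and
        $F(x) - F(a) = \int_a^x F'(t) \, dt$.
\end{enumerate}
```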

As one last exercise for the road, you should use this machinery to prove Rademacher’s Theorem:

If

-

The idea is to show that

-

It’s easy to wonder what complex measures are good for. One justification is the one we’re giving in this post: they provide a clean way of extending the fundamental theorem of calculus to a broader class of functions.

There’s actually much more to say, though. Complex measures are linear functionals, where we take a function

This is great, because it means we can bring measure theory to bear on problems in pure functional analysis. As one quick corollary, this lets us transfer the dominated convergence theorem to the setting of functionals. Of course, without the knowledge of complex measures, we wouldn’t be able to talk about this, since most functionals are complex valued!

At this point, the category theorist in me needs to mention a cute result from Emily Riehl’s Category Theory in Context. There are two functors

It seems reasonable to me that there would also be a natural isomorphism between the complex dual of the functions vanishing at infinity and the space of complex valued measures, but I can’t find a reference and I’m feeling too lazy to work it out myself right now… Maybe one day a kind reader will leave a comment letting me know? ↩

-

By the Riemann Rearrangement Theorem. ↩

-

In fact, since

-

Really it’s a statement about real valued signed measures, and so it allows for either

I went back and forth for a long time on whether to include a discussion about signed measures in this post. Eventually, I decided it made the post too long, and it encouraged me to include details that obscure the main points. I want these posts to show the forest rather than the trees, and here we are. ↩

-

Notice for us

With this generality, we can know for sure that we can work in any space which admits an effectively computable (

Any space with a computable notion of integration also admits a computable notion of integrating complex measures by application of Radon-Nikodym! ↩

-

If you’re familiar with product measures, there’s actually a useful fact:

If

This is great, since it means to integrate against some product measure on

-

Mathematicians like to use words like “variation” and “oscillation” rather than “wiggliness”. I can’t imagine why. ↩

-

Another way of viewing the issue (particularly in light of the upcoming theorem) is that

-

There is actually a characterization of

-

For this, it might be useful to look up the more technical definitions of “bounded variation” and “absolutely continuous” that I’ve omitted in this post. Both can be found in Chapter